Reduce Return RDD

This PySpark RDD tutorial explains what an RDD (Resilient Distributed Dataset) is, what its advantages are, and how to create and use one. All the RDD examples it explains are available in the PySpark examples project on GitHub for quick reference.

RDD operations fall into two groups. Transformations return a new RDD. Actions return a value to the driver program or write data to storage. Understanding transformations (e.g., `map()`, `filter()`, `reduceByKey()`) and actions (e.g., `count()`, `first()`, `collect()`) is crucial for effective data processing in PySpark. Actions that return a value include `reduce()`, which performs a rolling computation over the dataset, and `count()`, which returns the number of elements.

`reduce()` is a Spark action that aggregates the elements of an RDD using a function. That function takes two arguments and returns one. The PySpark API reference gives the signature as `RDD.reduce(f: Callable[[T, T], T]) → T`: it reduces the elements of the RDD using the specified commutative and associative binary operator. The reduce action takes the aggregation function as its parameter but does not require an initial value. Instead, it uses the first element (in practice, the first element of each partition) as the starting value. This is also why `reduce()` fails on an empty RDD.

Because `reduce()` is an action, it returns a single value of the element type `T`, not a new RDD. A snippet such as `yourNewRDD = yourOldRDD.reduce(lambda x, y: x)` is therefore misleadingly named. That particular lambda passes the left-hand argument through and discards the right one. Since it is not commutative, the API contract does not guarantee which element comes back.
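The contract described for `reduce()` has three parts: a binary `Callable[[T, T], T]`, no initial value, and a single value returned to the driver. The sketch below models that behaviour in plain Python so it runs without a Spark cluster. It is a hypothetical approximation, not PySpark's implementation. `partition`, `rdd_style_reduce`, `subtract`, and `keep_left` are illustrative names, and the list slicing only roughly resembles how `parallelize` splits data. The model mirrors the two phases: each partition is folded locally starting from its own first element, and then the partial results are combined with the same function.

```python
from functools import reduce
from operator import add
from typing import Callable, List, TypeVar

T = TypeVar("T")


def partition(data: List[T], num_slices: int) -> List[List[T]]:
    """Split data into num_slices contiguous chunks (hypothetical stand-in for parallelize)."""
    n = len(data)
    return [data[i * n // num_slices:(i + 1) * n // num_slices] for i in range(num_slices)]


def rdd_style_reduce(partitions: List[List[T]], f: Callable[[T, T], T]) -> T:
    """Hypothetical local model of RDD.reduce(f) -> T; not the PySpark source."""
    # Phase 1 (what Spark does per partition on the executors): fold each
    # non-empty partition starting from its own first element, so no initial
    # value is needed.
    partials = [reduce(f, part) for part in partitions if part]
    if not partials:
        # Without an initial value there is nothing to return for empty data.
        raise ValueError("cannot reduce an empty dataset")
    # Phase 2 (what Spark does on the driver): merge the per-partition results
    # with the same f. The result is one value of type T, not a new dataset.
    return reduce(f, partials)


def subtract(x: int, y: int) -> int:
    # Neither commutative nor associative, so it is unsafe to use with reduce().
    return x - y


def keep_left(x: int, y: int) -> int:
    # Passes the left-hand argument through and discards the right one.
    return x


if __name__ == "__main__":
    data = list(range(1, 11))
    print(rdd_style_reduce(partition(data, 3), add))        # 55
    print(rdd_style_reduce(partition(data, 3), max))        # 10
    # Same data, same function, different partitioning -> different answers.
    print(rdd_style_reduce(partition(data, 1), subtract))   # -53
    print(rdd_style_reduce(partition(data, 3), subtract))   # 23
    print(rdd_style_reduce(partition(data, 3), keep_left))  # 1
```

With a real `SparkContext` named `sc`, the corresponding call is `sc.parallelize(range(1, 11), 3).reduce(add)`, which returns the plain integer 55 to the driver. The two subtraction results show why the function must be commutative and associative. Spark is free to group elements by partition, and only such functions give an answer that does not depend on that grouping.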

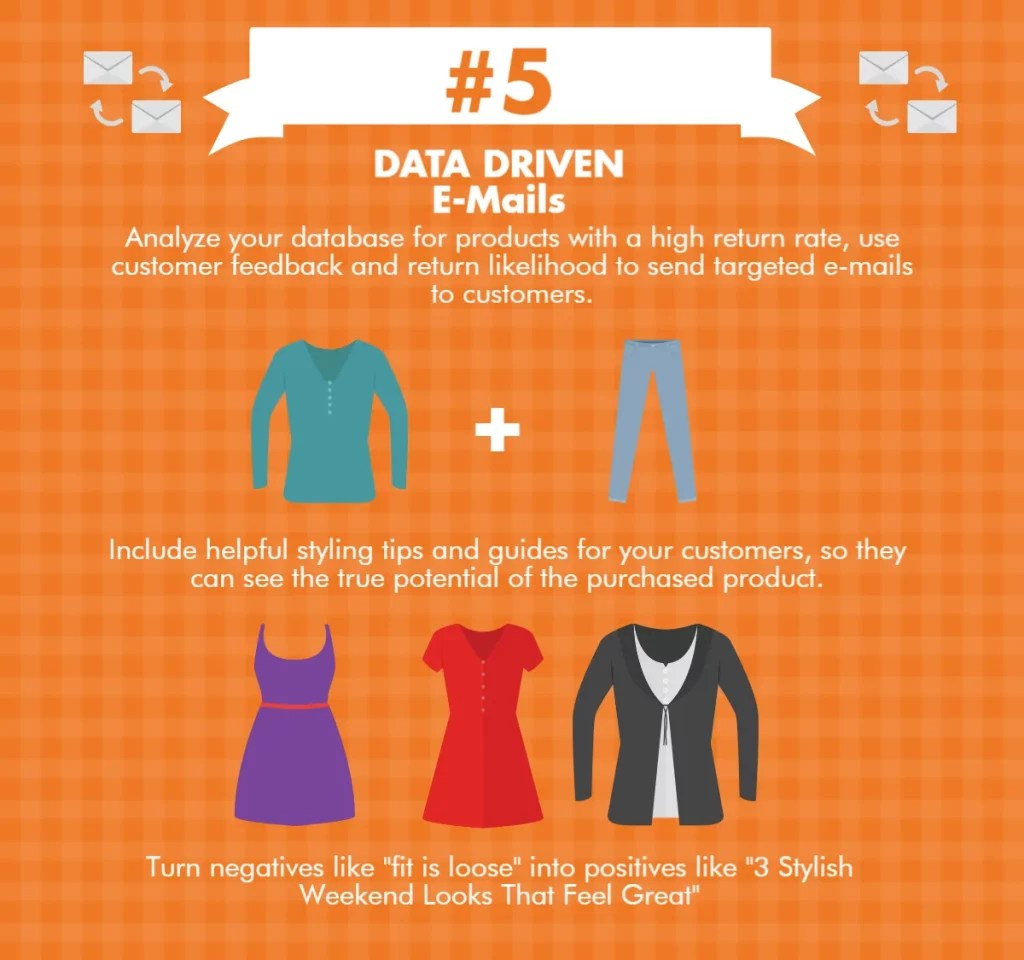

From www.returnlogic.com
