Toy Models Of Superposition Pdf

Toy Models of Superposition is a machine learning research paper from Anthropic by Nelson Elhage and 15 other authors (Elhage et al., 2022). Its abstract states: "This paper provides a toy model where polysemanticity can be fully understood, arising as a result of models storing additional sparse features." The authors use toy models, small ReLU networks trained on synthetic data with sparse input features, to illustrate how neural networks can represent more features than they have dimensions. A running example trains an embedding of five features of varying importance in two dimensions.
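
The toy-model setup can be sketched in a few dozen lines. This is a minimal sketch, not Anthropic's code: it assumes the "ReLU output" form of the model, x' = ReLU(W^T W x + b), trained with an importance-weighted squared reconstruction error on sparse synthetic features. The sparsity (0.9), the importance schedule (0.7^i), the initialization scale, the learning rate and step counts are illustrative assumptions, and the helper names (`sample_batch`, `forward`, `loss`, `train`) are hypothetical. Gradients are derived by hand so that the example needs only the Python standard library.

```python
import math
import random

# Illustrative hyperparameters (assumptions, not taken from the paper's notebook).
N_FEATURES = 5      # n: number of sparse input features
N_HIDDEN = 2        # m: hidden dimensions; m < n forces compression
SPARSITY = 0.9      # probability that each feature is zero in a sample
IMPORTANCE = [0.7 ** i for i in range(N_FEATURES)]  # assumed geometric decay


def sample_batch(rng, batch_size):
    """Each feature is 0 with probability SPARSITY, else uniform in [0, 1)."""
    return [[0.0 if rng.random() < SPARSITY else rng.random()
             for _ in range(N_FEATURES)] for _ in range(batch_size)]


def forward(W, b, x):
    """ReLU output model: h = W x, x' = ReLU(W^T h + b). W is m x n."""
    h = [sum(W[k][j] * x[j] for j in range(N_FEATURES)) for k in range(N_HIDDEN)]
    pre = [sum(W[k][i] * h[k] for k in range(N_HIDDEN)) + b[i] for i in range(N_FEATURES)]
    return h, pre, [max(0.0, p) for p in pre]


def loss(W, b, batch):
    """Importance-weighted squared reconstruction error, averaged over the batch."""
    total = 0.0
    for x in batch:
        _, _, y = forward(W, b, x)
        total += sum(IMPORTANCE[i] * (y[i] - x[i]) ** 2 for i in range(N_FEATURES))
    return total / len(batch)


def train(steps=500, batch_size=32, lr=0.1, seed=0):
    """Plain minibatch gradient descent with hand-derived gradients."""
    rng = random.Random(seed)
    W = [[rng.gauss(0.0, 0.3) for _ in range(N_FEATURES)] for _ in range(N_HIDDEN)]
    b = [0.0] * N_FEATURES
    for _ in range(steps):
        batch = sample_batch(rng, batch_size)
        gW = [[0.0] * N_FEATURES for _ in range(N_HIDDEN)]
        gb = [0.0] * N_FEATURES
        for x in batch:
            h, pre, y = forward(W, b, x)
            # dL/dpre_i, which is zero wherever the ReLU is inactive
            g = [2 * IMPORTANCE[i] * (y[i] - x[i]) / batch_size if pre[i] > 0 else 0.0
                 for i in range(N_FEATURES)]
            Wg = [sum(W[k][i] * g[i] for i in range(N_FEATURES)) for k in range(N_HIDDEN)]
            for k in range(N_HIDDEN):
                for j in range(N_FEATURES):
                    # W is used twice: as decoder (W^T h) and as encoder (h = W x)
                    gW[k][j] += h[k] * g[j] + Wg[k] * x[j]
            for i in range(N_FEATURES):
                gb[i] += g[i]
        for k in range(N_HIDDEN):
            for j in range(N_FEATURES):
                W[k][j] -= lr * gW[k][j]
        for i in range(N_FEATURES):
            b[i] -= lr * gb[i]
    return W, b


if __name__ == "__main__":
    eval_batch = sample_batch(random.Random(123), 400)
    W0, b0 = train(steps=0)    # untrained weights from the same seed
    W1, b1 = train(steps=500)
    print("loss before:", loss(W0, b0, eval_batch))
    print("loss after: ", loss(W1, b1, eval_batch))
```

Comparing the learned columns of `W1` (one 2D vector per feature) is the usual way to see whether features share directions, that is, whether the model uses superposition. A real replication would more likely use an autodiff library and many more steps.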

From www.givingwhatwecan.org

Several replications and follow-up studies build on the paper. One GitHub repository describes itself as "a replication of the paper 'Toy Models of Superposition', by Elhage et al." Another study investigates phase transitions in a toy model of superposition (TMS) (Elhage et al., 2022) using singular learning theory. An accompanying notebook includes the toy model training framework used to generate most of the results in the paper.

AI interpretability research at Harvard University · Giving What We Can

Superposition is a real, observed phenomenon. In the paper's toy models, both monosemantic and polysemantic neurons can form, and the authors report preliminary evidence that superposition may be linked to adversarial examples and grokking. In a video made in collaboration with Jess Smith, the presenters read through the Anthropic paper and discuss it.
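
The five-features-in-two-dimensions example can be checked by hand. The sketch below assumes the features sit at the corners of a regular pentagon (a geometry the paper reports for this setting; here it is placed by hand, not learned) and checks two things: that a negative bias lets the ReLU discard interference when a single feature is active, and how much of a dimension each feature gets under the feature-dimensionality measure D_i = ||W_i||^2 / sum_j (Ŵ_i · W_j)^2, following our reading of the paper's definition. The names `COLUMNS`, `reconstruct` and `dimensionality` are hypothetical.

```python
import math

N_FEATURES = 5
# Assumed pentagon embedding: feature i -> unit vector at angle 2*pi*i/5 in 2D.
COLUMNS = [(math.cos(2 * math.pi * i / N_FEATURES), math.sin(2 * math.pi * i / N_FEATURES))
           for i in range(N_FEATURES)]


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def reconstruct(x, bias):
    """x' = ReLU(W^T W x + b), where W's columns are COLUMNS and b is a shared bias."""
    h = (sum(c[0] * xi for c, xi in zip(COLUMNS, x)),
         sum(c[1] * xi for c, xi in zip(COLUMNS, x)))
    return [max(0.0, dot(c, h) + bias) for c in COLUMNS]


def dimensionality(i):
    """D_i = ||W_i||^2 / sum_j (W_i_hat . W_j)^2, the fraction of a dimension feature i uses."""
    wi = COLUMNS[i]
    norm = math.sqrt(dot(wi, wi))
    return dot(wi, wi) / sum((dot(wi, wj) / norm) ** 2 for wj in COLUMNS)


# Neighbouring features interfere by cos(72 deg) ~ 0.309 and the others by
# cos(144 deg) ~ -0.809, so a bias slightly below -0.309 zeroes all interference
# when only one feature is active.
bias = -math.cos(2 * math.pi / N_FEATURES) - 0.01
out = reconstruct([1.0, 0.0, 0.0, 0.0, 0.0], bias)
print(out)
print([dimensionality(i) for i in range(N_FEATURES)])
```

Each feature ends up with 2/5 of a dimension, and the five values sum to the two available hidden dimensions. The active feature comes out as 1 + b rather than 1, so the bias that filters interference also shrinks the signal; this is one of the trade-offs a trained model has to balance, and it only works well when features are rarely active at the same time.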

From www.researchgate.net

(PDF) Superposition Model and its Applications

From www.researchgate.net

(PDF) Extremal behaviour in models of superposition of random variables

From www.youtube.com

A Walkthrough of Toy Models of Superposition w/ Jess Smith YouTube

From zhuanlan.zhihu.com

[Translation] Toy Models of Superposition · Zhihu

From enginedbscientizes.z21.web.core.windows.net

Law Of Superposition Pdf

From www.anthropic.com

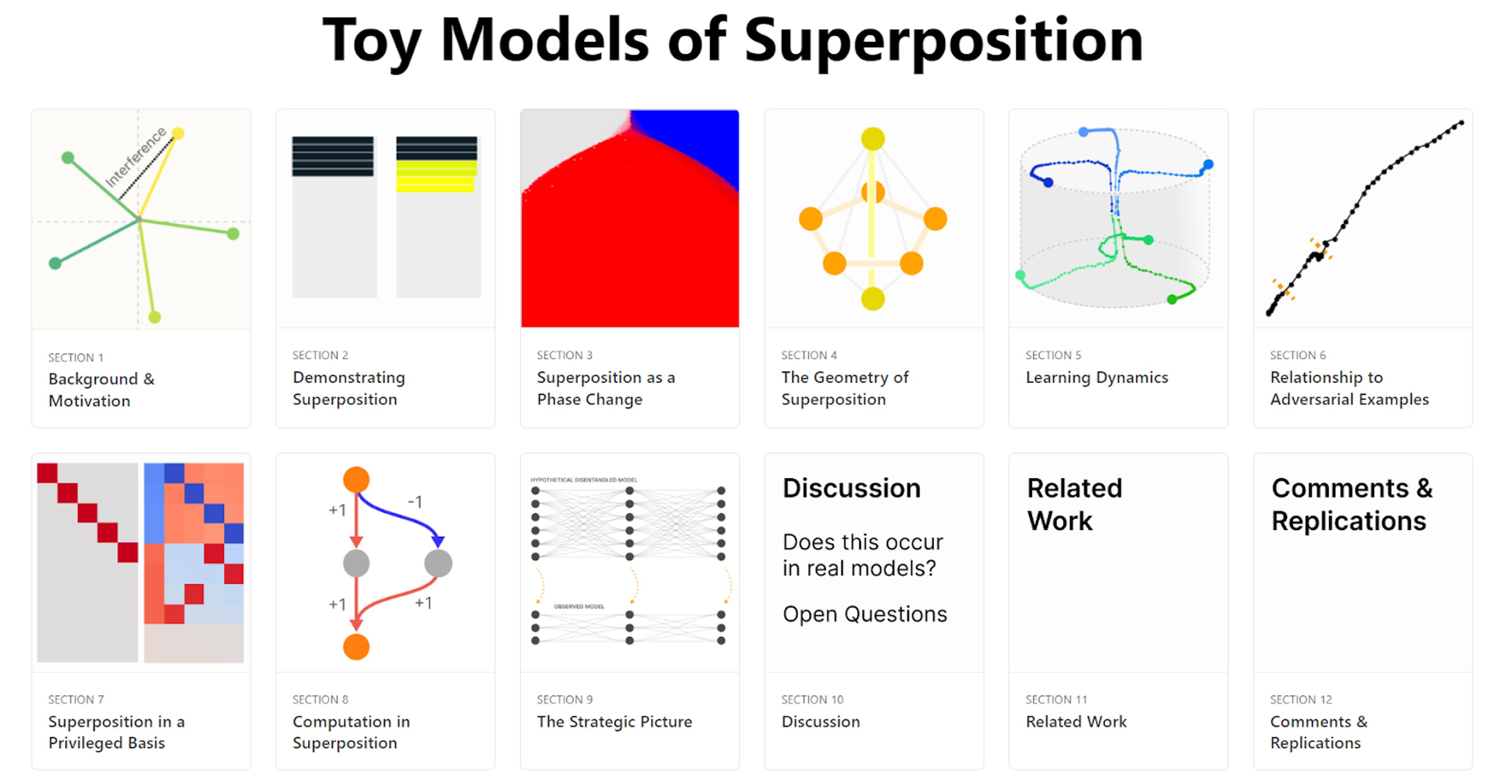

Anthropic Toy Models of Superposition

From www.youtube.com

Principle of Superposition Explained with Example Mechanics of Solid

From www.researchgate.net

(PDF) A Combined Superposition and Model Evolution Calculus

From deepai.org

Toy Models of Superposition DeepAI

From github.com

GitHub anthropics/toymodelsofsuperposition Notebooks

From dokumen.tips

(PDF) Superposition and Model Evolution Combined DOKUMEN.TIPS

From modelshop.co.uk

4D model gallery

From www.reddit.com

[R] Toy Models of Superposition r/MachineLearning

From www.youtube.com

Toy Models of Superposition Pt 2 Singular Learning Theory Seminar 38

From www.youtube.com

Superposition Theorem Circuits & Systems YouTube

From www.semanticscholar.org

Figure 14 from Engineering Monosemanticity in Toy Models Semantic Scholar

From shopee.sg

Beili Bridge Material Package Scientific Experiment Mechanical

From www.youtube.com

MLBBQ Toy Models of Superposition by Eloy Geenjaar YouTube

From www.researchgate.net

Structural superposition of the modeltemplate pairs.

From circuitglobe.com

What is a Superposition Theorem? Circuit Globe

From github.com

GitHub Jannoshh/superposition Replicating Toy Models of

From www.researchgate.net

(PDF) Superposition