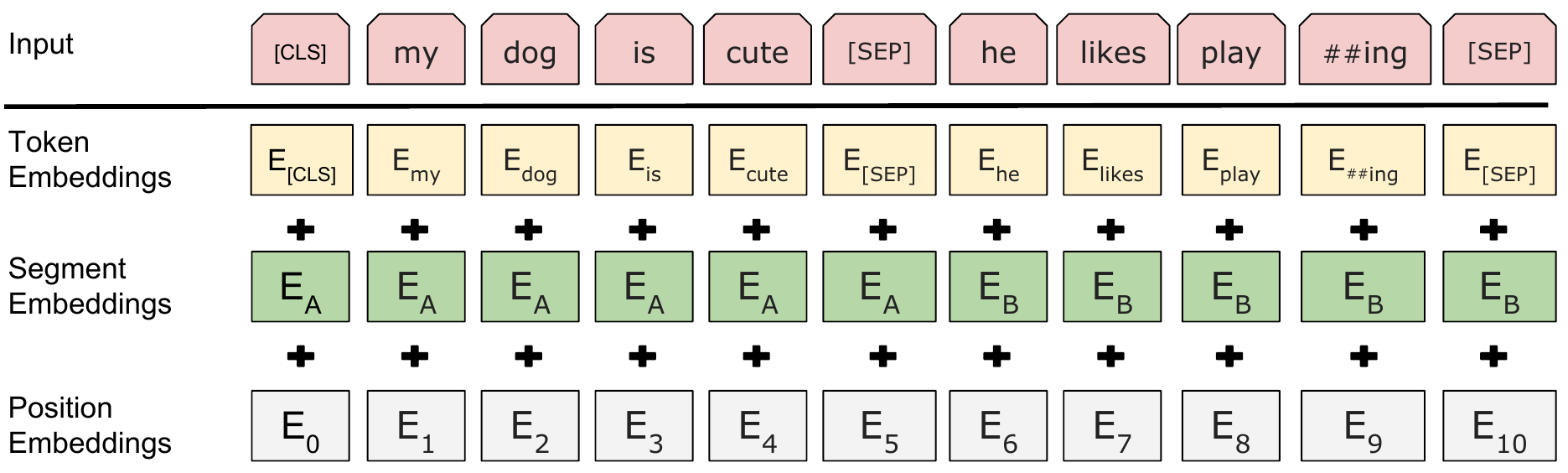

What Is Bert Embeddings . This tutorial covers the basics. Learn about bert, a bidirectional transformer pretrained on masked language modeling and next sentence prediction. Find out how to use bert. It is based on the. Bert stands for bidirectional encoder representations from transformers and is a language representation model by. Learn how to use bert to extract word and sentence embeddings from text data, and how to measure their similarity using cosine similarity. To represent textual input data, bert relies on 3 distinct types of embeddings:

from www.adityaagrawal.net

To represent textual input data, bert relies on 3 distinct types of embeddings: Find out how to use bert. Bert stands for bidirectional encoder representations from transformers and is a language representation model by. This tutorial covers the basics. Learn how to use bert to extract word and sentence embeddings from text data, and how to measure their similarity using cosine similarity. Learn about bert, a bidirectional transformer pretrained on masked language modeling and next sentence prediction. It is based on the.

Bidirectional Encoder Representations from Transformers (BERT) Aditya

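Masked language modeling hides part of the input and trains BERT to recover the hidden tokens using context from both sides. The Python sketch below illustrates the masking recipe described in the BERT paper: about 15% of tokens are selected for prediction, and of those, 80% become `[MASK]`, 10% become a random token, and 10% stay unchanged. It is a simplification under stated assumptions: tokens are plain strings, each non-special token is selected by an independent coin flip, and the function name `mask_tokens` and the toy vocabulary are hypothetical.

```python
import random

MASK = "[MASK]"
SPECIAL_TOKENS = {"[CLS]", "[SEP]"}


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Apply BERT-style masking to a list of string tokens (hypothetical helper).

    Returns (masked_tokens, labels). labels[i] is the original token at each
    position selected for prediction and None everywhere else.
    """
    if rng is None:
        rng = random.Random()
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Special tokens are never selected; other tokens are selected
        # independently with probability mask_prob (a simplification).
        if tok in SPECIAL_TOKENS or rng.random() >= mask_prob:
            continue
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)  # 10%: replace with a random token
        # Remaining 10%: keep the original token unchanged.
    return masked, labels


if __name__ == "__main__":
    sentence = ["[CLS]", "the", "cat", "sat", "on", "the", "mat", "[SEP]"]
    toy_vocab = ["the", "cat", "sat", "on", "mat", "dog"]
    print(mask_tokens(sentence, toy_vocab, rng=random.Random(42)))
```

Positions whose label is `None` are ignored by the MLM loss; the model is only asked to predict the selected positions, including the ones that were left unchanged.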

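Next sentence prediction trains BERT to decide whether sentence B actually follows sentence A. Each training pair is packed as `[CLS] A [SEP] B [SEP]`; half of the time B is the true next sentence (IsNext), otherwise it is a random sentence (NotNext). The sketch below assumes sentences are already tokenized into lists of strings and builds such pairs together with the segment ids (0 for sentence A, 1 for sentence B) that select the segment embedding. The helper names `build_pair` and `sample_nsp_example` are hypothetical, and drawing the negative from the same short list, rather than from a different document in the corpus, is a simplification.

```python
import random


def build_pair(sent_a, sent_b):
    """Pack two tokenized sentences as [CLS] A [SEP] B [SEP]."""
    tokens = ["[CLS]"] + sent_a + ["[SEP]"] + sent_b + ["[SEP]"]
    # Segment ids: 0 covers [CLS], sentence A and the first [SEP];
    # 1 covers sentence B and the final [SEP].
    segment_ids = [0] * (len(sent_a) + 2) + [1] * (len(sent_b) + 1)
    return tokens, segment_ids


def sample_nsp_example(sentences, i, rng):
    """Build one NSP example from sentence i of an ordered list.

    Requires i + 1 < len(sentences) and at least one other sentence to draw
    a negative from. Returns (tokens, segment_ids, is_next).
    """
    if rng.random() < 0.5:
        tokens, segment_ids = build_pair(sentences[i], sentences[i + 1])
        return tokens, segment_ids, True   # IsNext
    # NotNext: any sentence other than i itself and its true successor.
    candidates = [j for j in range(len(sentences)) if j not in (i, i + 1)]
    j = rng.choice(candidates)
    tokens, segment_ids = build_pair(sentences[i], sentences[j])
    return tokens, segment_ids, False      # NotNext


if __name__ == "__main__":
    doc = [["the", "man", "went", "home"], ["he", "made", "dinner"], ["penguins", "swim"]]
    print(sample_nsp_example(doc, 0, random.Random(7)))
```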

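The sum of token, segment, and position embeddings can be sketched in plain Python. Everything here is a toy assumption: the tables are tiny and randomly initialized, whereas a trained model learns them (the released `bert-base-uncased` checkpoint, for example, has a 30,522-entry WordPiece vocabulary, 2 segment types, 512 positions, and 768-dimensional vectors). The real model also applies layer normalization and dropout to the sum, which this sketch omits. The function names `make_table` and `input_embeddings` are hypothetical.

```python
import random


def make_table(rows, dim, rng):
    """Toy embedding table: `rows` random vectors of length `dim`."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(rows)]


def input_embeddings(token_ids, segment_ids, token_table, segment_table, position_table):
    """Return token + segment + position embedding for every input position."""
    vectors = []
    for pos, (tok_id, seg_id) in enumerate(zip(token_ids, segment_ids)):
        tok = token_table[tok_id]      # which token it is
        seg = segment_table[seg_id]    # which sentence (A=0, B=1) it belongs to
        p = position_table[pos]        # where it sits in the sequence
        vectors.append([a + b + c for a, b, c in zip(tok, seg, p)])
    return vectors


if __name__ == "__main__":
    rng = random.Random(0)
    dim = 4  # kept tiny for illustration
    token_table = make_table(10, dim, rng)     # toy vocabulary of 10 ids
    segment_table = make_table(2, dim, rng)    # segments A and B
    position_table = make_table(8, dim, rng)   # up to 8 positions
    for vec in input_embeddings([1, 5, 2], [0, 0, 1], token_table, segment_table, position_table):
        print([round(x, 3) for x in vec])
```

Because each position has its own position embedding, the same token id yields a different input vector at different positions, which is how the encoder can tell word order apart.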

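Word embeddings are read from the encoder's hidden states, where each token has one vector per layer. Two practical questions arise: which layers to use, and what to do when WordPiece splits a word into several sub-word tokens. One common heuristic, assumed here rather than prescribed by BERT itself, is to sum the last four layers and then average the pieces of each word. The sketch takes hidden states as nested Python lists (one list of token vectors per layer) and a `word_ids` list that maps each token to its word index, or `None` for special tokens; Hugging Face fast tokenizers expose a similar mapping through `word_ids()`. The function name `word_vectors` is hypothetical.

```python
def word_vectors(hidden_states, word_ids, last_n=4):
    """Return one vector per word, in word order (hypothetical helper).

    hidden_states: list of layers; each layer is a list of token vectors.
    word_ids:      word index for each token, or None for special tokens.
    last_n:        how many of the final layers to sum (a heuristic choice).
    """
    layers = hidden_states[-last_n:]
    n_tokens, dim = len(layers[0]), len(layers[0][0])

    # Step 1: sum the last `last_n` layers for every token.
    token_vecs = [
        [sum(layer[t][k] for layer in layers) for k in range(dim)]
        for t in range(n_tokens)
    ]

    # Step 2: average the sub-word tokens that belong to the same word.
    sums, counts = {}, {}
    for vec, w in zip(token_vecs, word_ids):
        if w is None:  # skip [CLS], [SEP] and padding
            continue
        acc = sums.setdefault(w, [0.0] * dim)
        sums[w] = [a + b for a, b in zip(acc, vec)]
        counts[w] = counts.get(w, 0) + 1
    return [[x / counts[w] for x in sums[w]] for w in sorted(sums)]


if __name__ == "__main__":
    # Hypothetical hidden states: 5 layers, 4 tokens ([CLS], "em", "##bed", [SEP]), dim 2.
    layer = [[0.0, 0.0], [1.0, 2.0], [3.0, 4.0], [0.0, 0.0]]
    hidden = [layer] * 5
    print(word_vectors(hidden, [None, 0, 0, None]))
```

Summing the last four layers is only one option; using the last layer alone (`last_n=1`) or concatenating layers are equally valid choices to compare on your own task.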

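A sentence embedding needs a single vector for the whole input. Two common choices are the final-layer vector of the `[CLS]` token and the mean of the final-layer token vectors, with padding excluded via the attention mask. The BERT paper notes that the `[CLS]` vector is not a meaningful sentence representation without fine-tuning, so for a model used as-is, mean pooling is often preferred. The sketch below shows both on plain lists; the names `mean_pool` and `cls_vector` are hypothetical.

```python
def mean_pool(token_vecs, attention_mask):
    """Average the token vectors whose attention-mask entry is 1 (padding is skipped)."""
    dim = len(token_vecs[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_vecs, attention_mask):
        if keep:
            total = [a + b for a, b in zip(total, vec)]
            count += 1
    if count == 0:
        raise ValueError("attention_mask selects no tokens")
    return [x / count for x in total]


def cls_vector(token_vecs):
    """Use the final-layer vector at position 0, i.e. the [CLS] token."""
    return list(token_vecs[0])


if __name__ == "__main__":
    # Hypothetical final-layer vectors: [CLS], two word tokens, [SEP], one padding slot.
    last_layer = [[0.5, 0.5], [1.0, 0.0], [0.0, 1.0], [0.2, 0.2], [9.0, 9.0]]
    mask = [1, 1, 1, 1, 0]
    print(mean_pool(last_layer, mask))
    print(cls_vector(last_layer))
```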

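Cosine similarity compares two embeddings by the angle between them: cos(u, v) = (u · v) / (|u| |v|). It ranges from -1 to 1, and values closer to 1 mean the vectors point in more similar directions. It applies the same way to word vectors and to sentence vectors. A minimal, dependency-free sketch (the function name is illustrative):

```python
import math


def cosine_similarity(u, v):
    """cos(u, v) = (u . v) / (|u| * |v|), a value in [-1, 1]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    # Two hypothetical sentence vectors pointing in nearly the same direction.
    print(cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.5]))
```

To compare sentences, compute one vector per sentence with a pooling step and pass the pair to `cosine_similarity`; ranking candidates by this score is a simple way to find the most similar text.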