Dropout Neural Network Keras

Dropout is a simple and powerful regularization technique for neural networks and deep learning models. It works by randomly reducing the number of interconnected neurons within a network: at every training step, each neuron has a chance of being temporarily dropped. In this post, we first recall the basics of dropout at a high level, then cover how to implement Keras-based neural networks with dropout, including how to add dropout regularization to MLP, CNN, and RNN layers using the Keras API.

Keras provides a dropout layer as tf.keras.layers.Dropout. The call keras.layers.Dropout(rate, noise_shape=None, seed=None, **kwargs) applies dropout to the input and takes the dropout rate as its first parameter. You can find more details in Keras's documentation.

To effectively use dropout in neural networks, consider the following tips:

- Start with a low dropout rate: begin with a dropout rate of around 20% and adjust based on the model's performance.
- Higher dropout rates (up to 50%) can be used for more complex models.
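To make the mechanism concrete, here is a minimal NumPy sketch of "inverted" dropout. It is not the Keras implementation; the function name `dropout` and its `training` and `rng` arguments are assumptions made for illustration. It zeroes each element with probability `rate` during training and rescales the survivors by 1 / (1 - rate), which is the behaviour the Keras documentation describes for its Dropout layer, and it leaves the input untouched at inference time.

```python
import numpy as np


def dropout(x, rate, training=True, rng=None):
    """Illustrative inverted dropout (hypothetical helper, not a Keras API).

    Each element of x is set to 0 with probability `rate`; the remaining
    elements are scaled by 1 / (1 - rate) so the expected sum is unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    # At inference time (or with rate 0) dropout is the identity.
    if not training or rate == 0.0:
        return x
    rng = np.random.default_rng() if rng is None else rng
    keep_prob = 1.0 - rate
    # Boolean mask: True where the unit is kept for this training step.
    mask = rng.random(x.shape) < keep_prob
    return np.where(mask, x / keep_prob, 0.0)


# A fresh mask is drawn on every call, i.e. on every training step.
activations = np.ones((4, 5))
step_1 = dropout(activations, rate=0.2, rng=np.random.default_rng(0))
step_2 = dropout(activations, rate=0.2, rng=np.random.default_rng(1))
```

With a rate of 0.2, roughly one unit in five is zeroed on each call and the kept units become 1.25, so the mean activation stays close to its original value.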

from machinelearningmastery.com


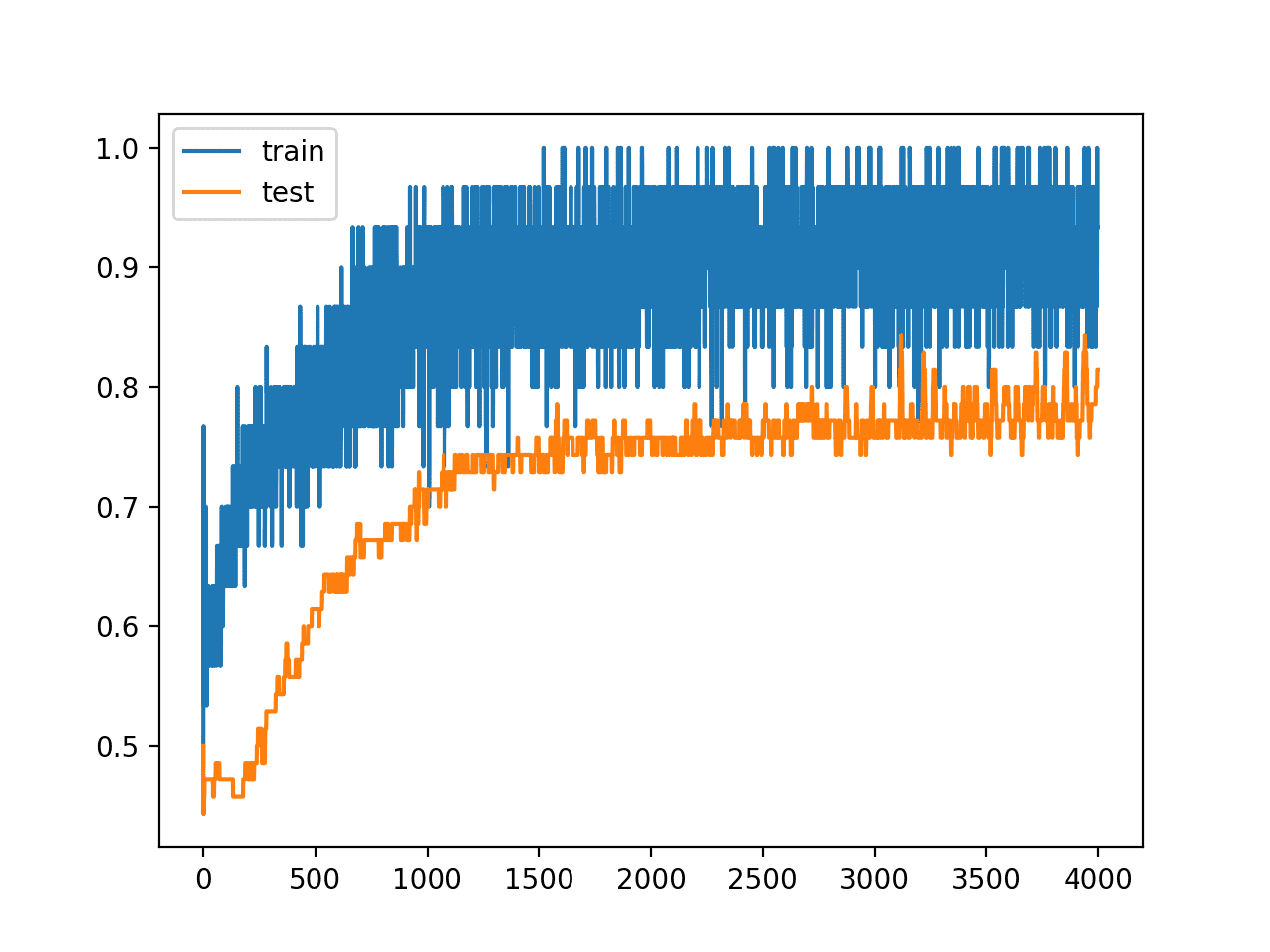

How to Reduce Overfitting With Dropout Regularization in Keras

This tutorial covers how to create a dropout layer using the Keras API and how to add dropout regularization to MLP, CNN, and RNN layers. The Dropout layer randomly sets input units to 0 with a frequency of rate at each step during training, and it takes the dropout rate as its first parameter. As a starting point, use a low rate and raise it (up to 50%) for more complex models.
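As a rough sketch of what adding dropout to MLP, CNN, and RNN layers can look like, the builders below place `tf.keras.layers.Dropout` in three small models. The function names, layer sizes, and input shapes are assumptions chosen only for illustration, and the 0.2 default follows the "start around 20%" tip; none of this is taken from the linked tutorial. For the recurrent case, the sketch also uses the `dropout` and `recurrent_dropout` arguments that the Keras LSTM layer accepts.

```python
import numpy as np
import tensorflow as tf

layers = tf.keras.layers


def build_mlp(n_features=20, rate=0.2):
    """MLP: dropout between the hidden Dense layer and the output."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(n_features,)),
        layers.Dense(64, activation="relu"),
        layers.Dropout(rate),
        layers.Dense(1, activation="sigmoid"),
    ])


def build_cnn(input_shape=(28, 28, 1), rate=0.2):
    """CNN: dropout after the convolution and pooling block."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        layers.Conv2D(16, 3, activation="relu"),
        layers.MaxPooling2D(),
        layers.Dropout(rate),
        layers.Flatten(),
        layers.Dense(10, activation="softmax"),
    ])


def build_rnn(timesteps=10, n_features=8, rate=0.2):
    """RNN: dropout on the LSTM inputs and recurrent state, plus a Dropout layer."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(timesteps, n_features)),
        layers.LSTM(32, dropout=rate, recurrent_dropout=rate),
        layers.Dropout(rate),
        layers.Dense(1),
    ])


mlp = build_mlp()
batch = np.ones((2, 20), dtype="float32")
# Dropout is only active when training=True; inference is deterministic.
inference_out = mlp(batch, training=False)
training_out = mlp(batch, training=True)
```

Calling `model.fit` enables dropout automatically, while `model.predict` and `model.evaluate` run with it disabled, so the `training` flag only needs to be passed explicitly when calling a model directly.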
