Airflow Job Example

Apache Airflow is a tool for authoring, scheduling, and monitoring pipelines. It is used for defining and managing a directed acyclic graph (DAG) of tasks. As a result, it is an ideal solution for ETL and MLOps use cases. Data engineers use it to programmatically orchestrate and schedule data pipelines and to configure retries. A typical pipeline extracts data from many sources, aggregates and transforms it, and stores it in a data warehouse; scheduling these tasks within Apache Airflow is a good way to automate that work.

There are 3 main steps when using Apache Airflow. First, you need to define the DAG, specifying the schedule on which the scripts run. The Airflow scheduler is designed to run as a persistent service in an Airflow production environment.

Once you have Airflow up and running with the quick start, the tutorials are a great way to get a sense of how Airflow works. The TaskFlow tutorial builds on the regular Airflow tutorial and focuses specifically on writing data pipelines using the TaskFlow API paradigm. Other material collected below covers ways to create DAGs in Apache Airflow and how to use the Databricks jobs feature with Apache Airflow.
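To make the idea of a directed acyclic graph of tasks with retries more concrete, here is a minimal sketch in plain Python. It does not use Airflow's API: `run_pipeline`, `TASKS`, and `DEPENDENCIES` are hypothetical names chosen for illustration, and the standard-library `graphlib` module stands in for the ordering work a real orchestrator would do. It only shows tasks running in dependency order, each receiving the results of its upstream tasks, with a simple retry loop.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies, retries=1):
    """Run callables in dependency order, retrying each task on failure.

    tasks: maps a task name to a callable (hypothetical, for illustration).
    dependencies: maps a task name to the list of its upstream task names.
    retries: extra attempts allowed per task after the first failure.
    """
    # static_order() yields every task after all of its upstream tasks.
    order = list(TopologicalSorter(dependencies).static_order())
    results = {}
    for name in order:
        # Pass upstream results as positional arguments, in declared order.
        upstream = [results[dep] for dep in dependencies.get(name, ())]
        for attempt in range(retries + 1):
            try:
                results[name] = tasks[name](*upstream)
                break
            except Exception:
                # Re-raise once the retry budget is used up.
                if attempt == retries:
                    raise
    return order, results


def extract():
    return [3, 1, 2]


def transform(rows):
    return sorted(rows)


def load(rows):
    return f"loaded {len(rows)} rows"


TASKS = {"extract": extract, "transform": transform, "load": load}
DEPENDENCIES = {"extract": [], "transform": ["extract"], "load": ["transform"]}

if __name__ == "__main__":
    order, results = run_pipeline(TASKS, DEPENDENCIES)
    print(order)
    print(results["load"])
```

In Airflow itself the same shape would be expressed as a DAG definition with a schedule, and the scheduler service, rather than a function call, would decide when each task runs.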

From airflow.apache.org

What is Airflow®? — Airflow Documentation

From kubernetes.io

Airflow on (Part 1) A Different Kind of Operator

From medium.com

Airflow a workflow management platform by AirbnbEng The Airbnb

From www.run.ai

Apache Airflow Use Cases, Architecture, and Best Practices

From towardsdatascience.com

An Introduction to Apache Airflow by Frank Liang Towards Data Science

From www.levels.fyi

Absolute Airflow Jobs Levels.fyi

From www.saagie.com

How to Easily Schedule Jobs with Apache Airflow? Saagie

From airflow.apache.org

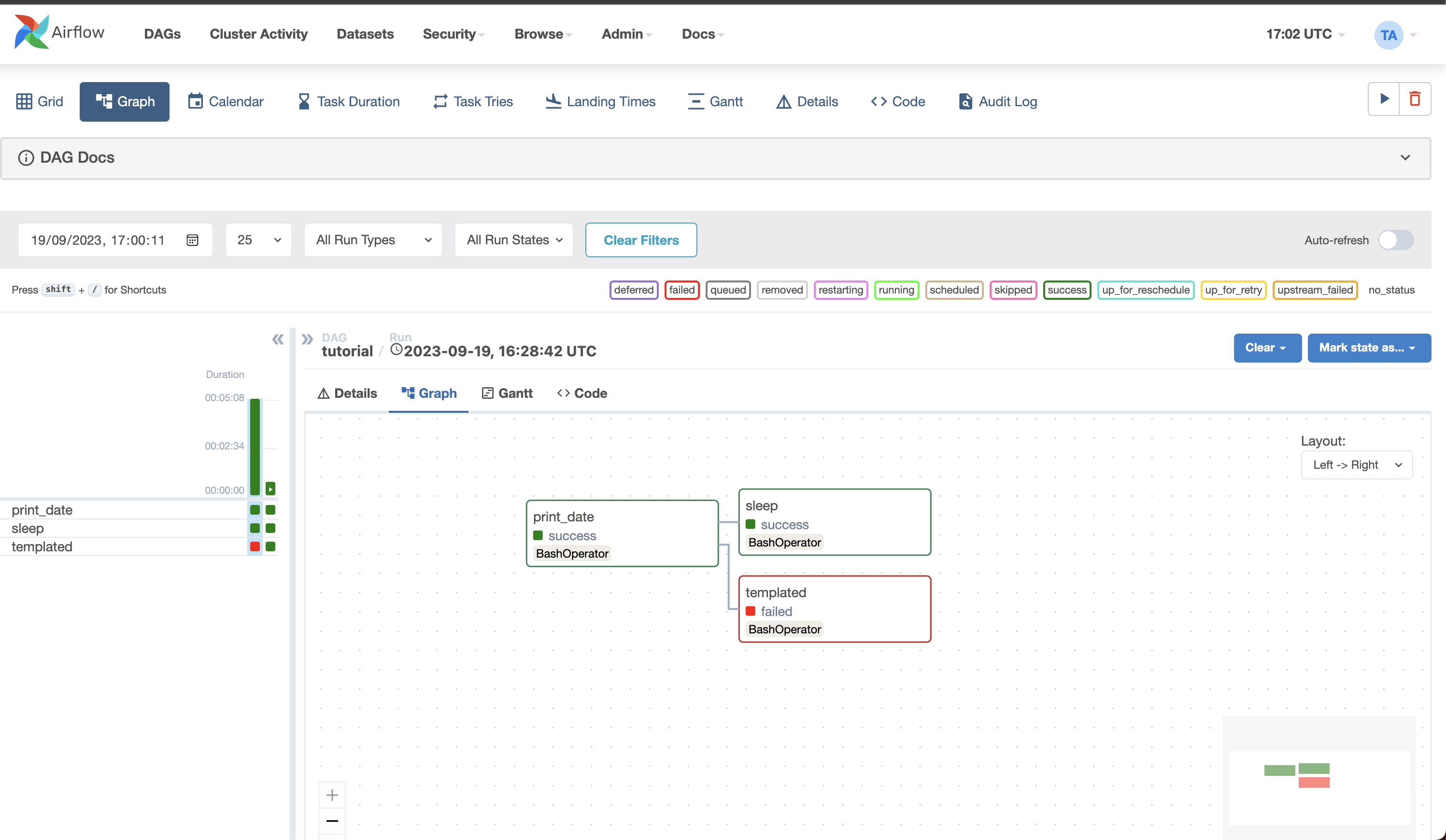

UI / Screenshots — Airflow Documentation

From docs.astronomer.io

An introduction to Apache Airflow Astronomer Documentation

From tantusdata.com

Monitoring Airflow jobs with TIG 1 system metrics TantusData

From hevodata.com

The Ultimate Guide on Airflow Scheduler Hevo

From www.kalosflorida.com

Airflow What It Is and How We Measure It Kalos Services

From www.velvetjobs.com

Aerodynamics Engineer Resume Samples Velvet Jobs

From www.upsolver.com

Apache Airflow When to Use it & Avoid it

From towardsdatascience.com

Getting started with Apache Airflow by Adnan Siddiqi Towards Data

From airflow-doc-zh.readthedocs.io

UI/Screenshots Airflowdoczh

From aws.amazon.com

Running Airflow Workflow Jobs on Amazon EKS with EC2 Spot Instances

From airflow.apache.org

Architecture Overview — Airflow Documentation

From docs.astronomer.io

Airflow components Astronomer Documentation

From www.youtube.com

Part 1 Project Overview Airflow Tutorial Automate EMR Jobs with

From medium.com

Mastering Apache Airflow 10 Key Concepts with Practical Examples and

From labs.jumpsec.com

Implementation and Dynamic Generation for Tasks in Apache Airflow

From xenonstack.com

Apache Airflow Benefits and Best Practices Quick Guide

From www.campfireanalytics.com

Apache Airflow How to Schedule and Automate... — Campfire Analytics

From machinelearninggeek.com

Apache Airflow A Workflow Management Platform Machine Learning Geek

From airflow.apache.org

Apache Airflow For Apache Airflow

From www.qubole.com

Apache Airflow What is Apache Airflow? Qubole

From medium.com

Understanding Apache Airflow’s key concepts Dustin Stansbury Medium

From www.mobilize.net

Using Airflow with Snowpark

From www.projectpro.io

Apache Airflow for Beginners Build Your First Data Pipeline

From blog.knoldus.com

Data Engineering Exploring Apache Airflow Knoldus Blogs

From airflow.apache.org

What is Airflow®? — Airflow Documentation

From ayc-data.com

A guide on Airflow best practices

From aws.amazon.com

Migrate from mainframe CA7 job schedules to Apache Airflow in AWS AWS

From www.youtube.com

Run PySpark Job using Airflow Apache Airflow Practical Tutorial Part

From blog.redactics.com

How To Poll an Airflow Job (i.e. DAG Run)