Reduce in RDD

Spark's RDD `reduce()` is an aggregate action used to calculate the min, max, and total of the elements in a dataset. In this tutorial, I will explain RDD `reduce()` and show two examples where I use Python's `reduce` from the `functools` library to repeatedly apply operations to Spark.
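
Here is a minimal sketch of the min, max, and total use case. It assumes `rdd` is an existing PySpark RDD of numbers, and the helper name `rdd_total_min_max` is hypothetical. Each function passed to `reduce()` takes two values and returns one value of the same type.

```python
import operator


def rdd_total_min_max(rdd):
    """Return (total, minimum, maximum) of a numeric RDD using reduce().

    Hypothetical helper: `rdd` is assumed to be a PySpark RDD. Each reduce()
    call is a separate action, so Spark runs three jobs. Consider calling
    rdd.cache() first if the RDD is expensive to recompute.
    """
    total = rdd.reduce(operator.add)  # f(a, b) = a + b
    smallest = rdd.reduce(min)        # built-in min(a, b) keeps the smaller value
    largest = rdd.reduce(max)         # built-in max(a, b) keeps the larger value
    return total, smallest, largest
```

With a running `SparkContext` named `sc`, `rdd_total_min_max(sc.parallelize([4, 1, 7, 3]))` should return `(15, 1, 7)`. PySpark RDDs also provide dedicated `sum()`, `min()`, and `max()` actions for these cases, and `reduce()` is the general form behind them.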

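Python's `functools.reduce` can apply one Spark operation repeatedly across a list. The sketch below shows two such uses and assumes PySpark DataFrames. The first folds `unionByName` over a list of DataFrames, and the second folds `withColumnRenamed` over the column names. Both helper names (`union_all`, `lowercase_columns`) are hypothetical, and these examples illustrate the pattern rather than reproduce the tutorial's own examples. `functools.reduce` runs on the driver and only chains lazy transformations. Spark does the actual work when an action is called.

```python
from functools import reduce


def union_all(dfs):
    """Union a non-empty list of DataFrames: ((df1 + df2) + df3) + ...

    Hypothetical helper. It assumes all DataFrames share the same column
    names. unionByName matches columns by name instead of by position.
    """
    return reduce(lambda left, right: left.unionByName(right), dfs)


def lowercase_columns(df):
    """Rename every column to lower case.

    Hypothetical helper. `df` is the initial accumulator, and each step
    returns a new DataFrame with one more column renamed.
    """
    return reduce(
        lambda acc, name: acc.withColumnRenamed(name, name.lower()),
        df.columns,  # plain Python list of column names, read once up front
        df,
    )

# Usage sketch (df_a, df_b, df_c are hypothetical DataFrames with equal columns):
#   combined = lowercase_columns(union_all([df_a, df_b, df_c]))
```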
from data-flair.training


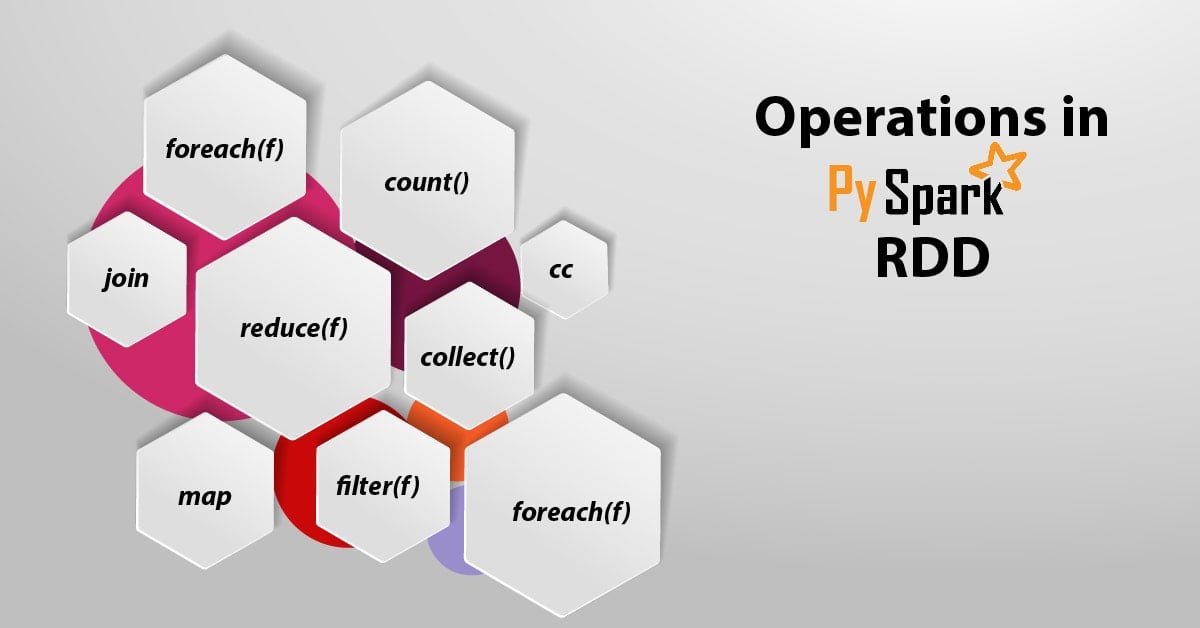

PySpark RDD With Operations and Commands DataFlair

In PySpark, the method is declared as `reduce(f: Callable[[T, T], T]) → T`. It reduces the elements of this RDD using the specified commutative and associative binary operator. `map` and `reduce` are methods of the RDD class, whose interface is similar to Scala collections, and what you pass to them are functions.
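
Why must the function be commutative and associative? `reduce()` first reduces each partition locally and then combines the per-partition results. As a result, how the applications are grouped depends on how the data is partitioned. The pure-Python sketch below imitates those two phases with a hypothetical `simulate_rdd_reduce` helper. It is a teaching model, not Spark's implementation. Because it merges partial results in partition order, it only exposes non-associative functions. The contract also requires commutativity so that Spark is free to merge partition results in any order.

```python
import operator
from functools import reduce


def simulate_rdd_reduce(data, f, num_partitions):
    """Teaching model of RDD.reduce(): reduce each partition, then merge.

    Hypothetical helper. Partitions are contiguous slices of `data`, and
    partial results are merged in partition order.
    """
    size = max(1, -(-len(data) // num_partitions))  # ceiling division, at least 1
    partitions = [data[i:i + size] for i in range(0, len(data), size)]
    # Phase 1: reduce inside each non-empty partition.
    partials = [reduce(f, part) for part in partitions if part]
    if not partials:
        # PySpark also raises a ValueError when reducing an empty RDD.
        raise ValueError("cannot reduce an empty dataset")
    # Phase 2: combine the per-partition results.
    return reduce(f, partials)


if __name__ == "__main__":
    data = list(range(1, 11))
    for n in (1, 2, 3):
        print(n, simulate_rdd_reduce(data, operator.add, n),
              simulate_rdd_reduce(data, operator.sub, n))
```

Addition gives 55 for 1, 2, and 3 partitions. Subtraction gives -53, 15, and 9 for the same partition counts. A non-associative function like subtraction therefore makes the result depend on partitioning.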

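What you pass to `map` and `reduce` are ordinary Python functions. `map` takes a one-argument function that is applied to each element. `reduce` takes a two-argument function that combines two elements into one. The minimal sketch below assumes `words` is a PySpark RDD of strings, and all function names in it are hypothetical. Because the interface mirrors collection APIs, the same functions also work locally with Python's built-in `map()` and `functools.reduce()`.

```python
import operator


def word_length(word):
    """Passed to map(): turns one element into one new element."""
    return len(word)


def longer_word(a, b):
    """Passed to reduce(): combines two elements into one of the same type.

    Ties on length are broken alphabetically, so the result does not depend
    on argument order (the function stays commutative and associative).
    """
    return max(a, b, key=lambda w: (len(w), w))


def total_characters(words):
    # map() is a lazy transformation; reduce() is the action that runs the job.
    return words.map(word_length).reduce(operator.add)


def longest_word(words):
    return words.reduce(longer_word)

# Usage sketch (requires a running SparkContext `sc`):
#   words = sc.parallelize(["spark", "rdd", "reduce"])
#   total_characters(words)  # 14
#   longest_word(words)      # "reduce"
# Local equivalent: functools.reduce(operator.add, map(word_length, ["spark", "rdd"]))  # 8
```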
- 21 map() and reduce() in RDDs (from www.linkedin.com)
- Spark RDD Features, Limitations and Operations, TechVidvan (from techvidvan.com)
- What is RDD? How It Works, Skill & Scope, Features & Operations (from www.educba.com)
- Rdd Reduce Spark at Mike Morales blog (from giovhovsa.blob.core.windows.net)
- What is RDD? Hadoop In Real World (from www.hadoopinrealworld.com)
- PySpark RDD Operations: Actions in PySpark RDD, Fold vs Reduce, Glom (from www.youtube.com)
- Apache Spark RDD Introduction Tutorial, CloudDuggu (from www.cloudduggu.com)
- Examples of assignment rules used in RDD. RDD, regression discontinuity (from www.researchgate.net)
- RDD Advance Transformation And Actions: groupByKey And reduceByKey (from www.youtube.com)
- Spark RDD Transformations and Actions, PySpark Tutorial for Beginners (from www.youtube.com)
- Implementing an RDD scanLeft Transform With Cascade RDDs, tool monkey (from erikerlandson.github.io)
- Why Spark RDD, WHOAMI (from www.itweet.cn)
- Illustrated Big Data: Spark Operations for RDD-Based Big Data Processing and Analysis, Alibaba Cloud Developer Community (from developer.aliyun.com)
- Map Reduce in Ruby, Slides (from slides.com)
- [SPARK] RDD, Action and Transformation (2) (from note-on-clouds.blogspot.com)
- API-Oriented Programming: RDD Programming, Zhenye's Blog (from zhenye-na.github.io)
- Spark RDD Operations: Transformation & Action with Example, DataFlair (from data-flair.training)
- Df Rdd Getnumpartitions Pyspark at Lee Lemus blog (from giobtyevn.blob.core.windows.net)
- SPARK vs Hadoop MapReduce, Manush Gupta (from manushgupta.github.io)
- Java Spark RDD reduce() Examples: sum, min and max operations (from www.javaprogramto.com)
- Illustrated Big Data: Spark Operations for RDD-Based Big Data Processing and Analysis (from www.showmeai.tech)
- Apache Spark: RDD, A Data Structure of Spark, by Berselin C R, May (from medium.com)
- Things to know about Spark RDD, Knoldus Blogs (from blog.knoldus.com)
- Introduction to Apache Spark, School of Data Science and Big Data (from bigdataworld.ir)
- Introduction to Hadoop and Spark, ppt download (from slideplayer.com)
- Snippets: Lineage of RDD (from www.prathapkudupublog.com)
- Snippets: Common methods in RDD (from www.prathapkudupublog.com)
- Python reduce() Function, Spark By {Examples} (from sparkbyexamples.com)
- Mathematical Statistics Functions in Spark RDD, MatNoble (from matnoble.github.io)
- What is RDD partitioning, YouTube (from www.youtube.com)
- Spark RDD (Low Level API) Basics using PySpark, by Sercan Karagoz (from medium.com)
- Apache Spark Transformations & Actions Tutorial, CloudDuggu (from www.cloudduggu.com)