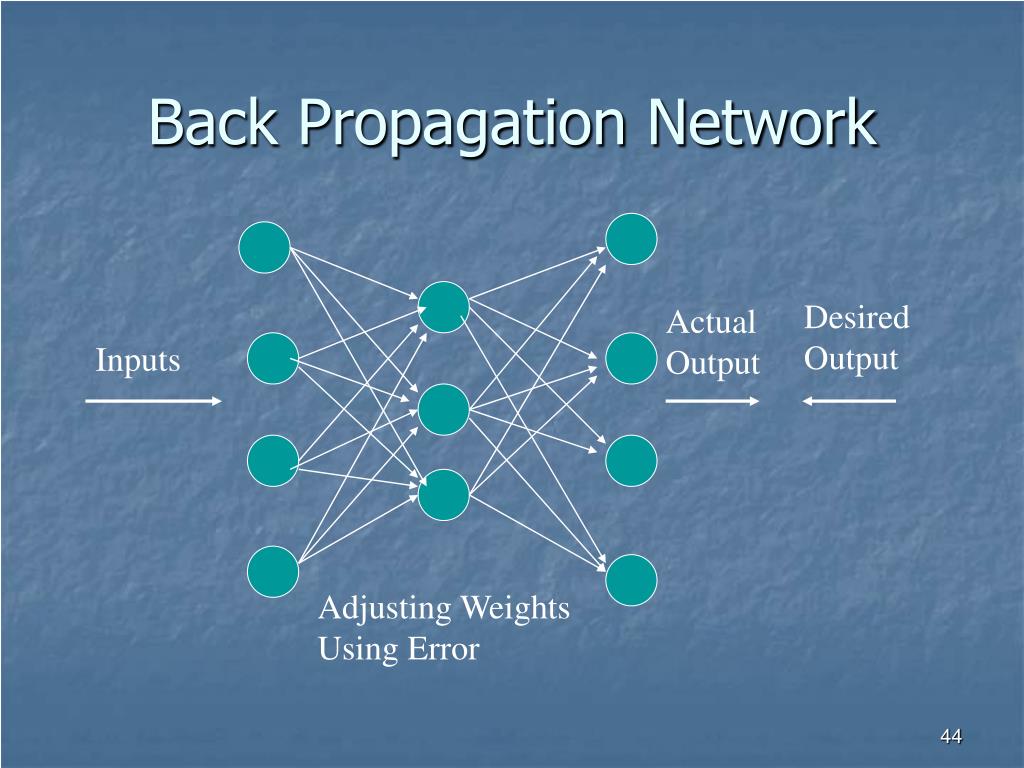

Back Propagation In Soft Computing

Backpropagation, short for “backward propagation of errors,” is an algorithm used to train neural network models, and it is arguably the most fundamental building block of neural network training. Its roots go back to work in the 1960s and 1970s, and it became the standard method for training multilayer networks after Rumelhart, Hinton and Williams popularized it in 1986. The algorithm feeds the error measured at the network's output back through the network. Using the chain rule, it computes the gradient of the loss function with respect to each weight, which tells us how a small change in that weight would change the error.
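Written out for a single weight, the chain-rule step looks like this. The notation is our own assumption rather than the original's. It assumes a fully connected network with weight $w^{(l)}_{jk}$ running from unit $k$ in layer $l-1$ to unit $j$ in layer $l$, biases $b^{(l)}_j$, an elementwise activation $\sigma$, a loss $\mathcal{L}$, and an output layer $L$.

$$
z^{(l)}_j = \sum_k w^{(l)}_{jk}\, a^{(l-1)}_k + b^{(l)}_j, \qquad a^{(l)}_j = \sigma\!\left(z^{(l)}_j\right)
$$

$$
\frac{\partial \mathcal{L}}{\partial w^{(l)}_{jk}}
= \frac{\partial \mathcal{L}}{\partial z^{(l)}_j}\,\frac{\partial z^{(l)}_j}{\partial w^{(l)}_{jk}}
= \delta^{(l)}_j\, a^{(l-1)}_k,
\qquad \delta^{(l)}_j \equiv \frac{\partial \mathcal{L}}{\partial z^{(l)}_j}
$$

$$
\delta^{(L)}_j = \frac{\partial \mathcal{L}}{\partial a^{(L)}_j}\,\sigma'\!\left(z^{(L)}_j\right),
\qquad
\delta^{(l)}_j = \sigma'\!\left(z^{(l)}_j\right) \sum_i w^{(l+1)}_{ij}\, \delta^{(l+1)}_i \quad (l < L)
$$

The last line is what lets the error flow backward: each layer's $\delta$ values are built from those of the layer after it.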

from www.slideserve.com

Backpropagation computes the weight gradients efficiently by working one layer at a time. It starts at the output layer and moves backward. The error term computed for one layer is reused when computing the gradients of the layer before it. As a result, the intermediate terms of the chain rule are calculated once instead of being re-derived for every weight.
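The layer-by-layer backward pass can be checked numerically. The sketch below is a minimal example under assumptions of our own, not taken from the original. It uses a hypothetical network with 2 inputs, 2 hidden units and 1 output, sigmoid activations, and squared-error loss $\tfrac12(a-y)^2$, written in plain Python. The function `backward` computes the output-layer error term once and reuses it for the hidden layer. The helper `numeric_grad_W1` (also hypothetical) compares one of its results against a central finite difference.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass; returns the intermediate values the backward pass reuses."""
    W1, b1, W2, b2 = params["W1"], params["b1"], params["W2"], params["b2"]
    z1 = [sum(W1[j][k] * x[k] for k in range(len(x))) + b1[j] for j in range(len(b1))]
    a1 = [sigmoid(z) for z in z1]
    z2 = sum(W2[j] * a1[j] for j in range(len(a1))) + b2
    a2 = sigmoid(z2)
    return z1, a1, z2, a2


def loss(params, x, y):
    # Squared error for one example: L = 0.5 * (a2 - y)^2
    a2 = forward(params, x)[3]
    return 0.5 * (a2 - y) ** 2


def backward(params, x, y):
    """Gradients of the loss w.r.t. every parameter, output layer first."""
    _, a1, _, a2 = forward(params, x)
    # Output layer: delta2 = dL/dz2 = (a2 - y) * sigmoid'(z2), where sigmoid'(z2) = a2 * (1 - a2)
    delta2 = (a2 - y) * a2 * (1.0 - a2)
    grad_W2 = [delta2 * a for a in a1]
    # Hidden layer: delta1[j] = W2[j] * delta2 * sigmoid'(z1[j]), reusing delta2
    delta1 = [params["W2"][j] * delta2 * a1[j] * (1.0 - a1[j]) for j in range(len(a1))]
    grad_W1 = [[delta1[j] * x[k] for k in range(len(x))] for j in range(len(a1))]
    return {"W1": grad_W1, "b1": delta1, "W2": grad_W2, "b2": delta2}


def numeric_grad_W1(params, x, y, j, k, eps=1e-6):
    """Central finite difference for one hidden-layer weight (used only as a check)."""
    original = params["W1"][j][k]
    params["W1"][j][k] = original + eps
    plus = loss(params, x, y)
    params["W1"][j][k] = original - eps
    minus = loss(params, x, y)
    params["W1"][j][k] = original  # restore the weight
    return (plus - minus) / (2 * eps)


random.seed(0)
params = {
    "W1": [[random.uniform(-1, 1) for _ in range(2)] for _ in range(2)],
    "b1": [0.0, 0.0],
    "W2": [random.uniform(-1, 1) for _ in range(2)],
    "b2": 0.0,
}
x, y = [0.5, -0.3], 1.0
analytic = backward(params, x, y)["W1"][0][1]
numeric = numeric_grad_W1(params, x, y, 0, 1)
print(f"backprop: {analytic:.8f}   finite difference: {numeric:.8f}")
```

The two printed numbers should agree to several decimal places. A finite-difference check like this is a common way to confirm that a hand-written backward pass applies the chain rule correctly.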


Backpropagation itself only computes gradients. An optimization method, typically gradient descent, then uses those gradients to adjust each weight in the direction that reduces the gap between the network's outputs and the target values. Training repeats the forward pass, the backward pass, and the weight update over many examples, and this gradually makes the network more accurate.
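To show where the gradients go, here is a hypothetical training loop. It is our own sketch, not the original's method. It pairs the backward pass with plain per-example gradient descent on the XOR problem. The network size (`hidden`), learning rate (`lr`), epoch count and random seed are arbitrary illustrative choices.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(epochs=5000, lr=0.5, hidden=3, seed=1):
    """Train a 2-input, `hidden`-unit, 1-output sigmoid network on XOR using
    backpropagation for the gradients and per-example gradient descent for the
    updates. Returns (mean loss before, mean loss after, final outputs)."""
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def predict(x):
        a1 = [sigmoid(sum(W1[j][k] * x[k] for k in range(2)) + b1[j]) for j in range(hidden)]
        a2 = sigmoid(sum(W2[j] * a1[j] for j in range(hidden)) + b2)
        return a1, a2

    def mean_loss():
        return sum(0.5 * (predict(x)[1] - y) ** 2 for x, y in data) / len(data)

    before = mean_loss()
    for _ in range(epochs):
        for x, y in data:
            a1, a2 = predict(x)  # forward pass
            # Backward pass: output error term, then hidden error terms (using the current W2)
            delta2 = (a2 - y) * a2 * (1.0 - a2)
            delta1 = [W2[j] * delta2 * a1[j] * (1.0 - a1[j]) for j in range(hidden)]
            # Gradient-descent step: move every parameter against its gradient
            for j in range(hidden):
                W2[j] -= lr * delta2 * a1[j]
                b1[j] -= lr * delta1[j]
                for k in range(2):
                    W1[j][k] -= lr * delta1[j] * x[k]
            b2 -= lr * delta2
    outputs = [round(predict(x)[1], 2) for x, _ in data]
    return before, mean_loss(), outputs


before, after, outputs = train_xor()
print(f"mean loss before: {before:.4f}  after: {after:.4f}")
print("outputs for (0,0), (0,1), (1,0), (1,1):", outputs)
```

Training starts from random weights, so the final outputs depend on the seed. Each step follows the negative gradient, but the sketch is not guaranteed to find a perfect XOR solution for every seed or learning rate.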
