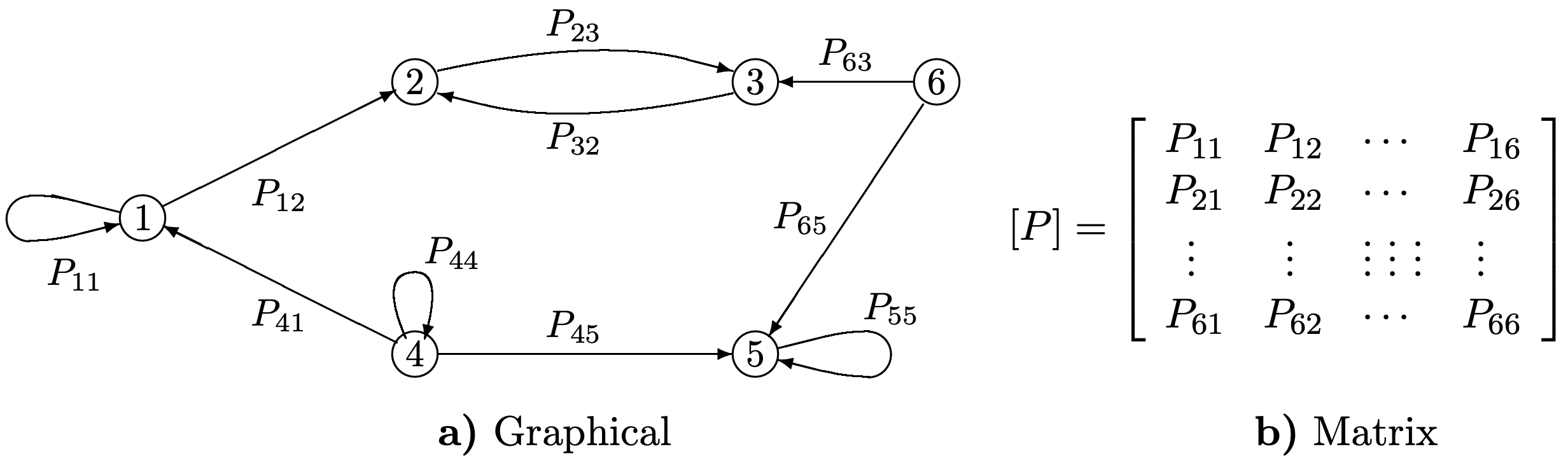

What Is a State in a Markov Chain

A Markov chain, named after Andrey Markov, is a mathematical system that hops from one state (a situation or set of values) to another according to certain probabilistic rules. Put differently, it describes a system whose state changes over time: it is a random process, written as a sequence X_n of random variables, that has the Markov property, meaning the next state depends only on the current state and not on the earlier history. In that sense Markov chains are a happy medium between complete independence and complete dependence. A Markov chain can, for example, represent the random motion of an object. The space on which a Markov process lives can be either discrete or continuous.

To better understand Markov chains, we need to introduce some definitions. The one-step transition probabilities are collected in a matrix in which each row represents an initial state and each column represents a terminal state, with the rows assigned to the states in a fixed order. The entry in a given row and column is then the probability of moving from that row's state to that column's state. States can in turn be classified, for example as transient, recurrent, or absorbing.
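The row and column convention described above can be sketched in a few lines of Python. This is a minimal illustration, not taken from any of the sources listed here: the three states `A`, `B`, `C`, the probabilities in `P`, and the helper names `step_distribution` and `simulate` are all assumptions chosen for the example.

```python
import random

# Hypothetical three-state chain; names and probabilities are illustrative only.
STATES = ["A", "B", "C"]

# P[i][j] = probability of moving from STATES[i] (row, initial state)
# to STATES[j] (column, terminal state) in one step. Each row sums to 1.
P = [
    [0.5, 0.3, 0.2],
    [0.1, 0.6, 0.3],
    [0.2, 0.2, 0.6],
]


def step_distribution(dist, matrix):
    """Advance a probability distribution over the states by one step.

    This is the row vector `dist` multiplied by the transition matrix.
    """
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]


def simulate(start, steps, matrix, rng):
    """Sample a path of state indices.

    The next state is drawn using only the current state's row,
    which is the Markov property.
    """
    path = [start]
    for _ in range(steps):
        current = path[-1]
        nxt = rng.choices(range(len(matrix)), weights=matrix[current])[0]
        path.append(nxt)
    return path


# Start in state A with certainty and look two steps ahead.
dist = [1.0, 0.0, 0.0]
for _ in range(2):
    dist = step_distribution(dist, P)

# Sample one random path of 10 steps starting from state A.
path = simulate(0, 10, P, random.Random(0))

print("two-step distribution from A:", dist)
print("sampled path:", [STATES[i] for i in path])
```

After two steps, `dist` holds the probabilities of being in each state given a start in `A`. Repeating the same update more times gives the distribution at any later time.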

From eng.libretexts.org

3.1 Introduction to Finite-state Markov Chains Engineering LibreTexts

From brilliant.org

Markov Chains Stationary Distributions Practice Problems Online

From www.geeksforgeeks.org

Finding the probability of a state at a given time in a Markov chain

From www.slideserve.com

PPT Markov Chains PowerPoint Presentation, free download ID6008214

From towardsdatascience.com

Introduction to Markov chains. Definitions, properties and PageRank

From eng.libretexts.org

3.1 Introduction to Finite-state Markov Chains Engineering LibreTexts

From timeseriesreasoning.com

Introduction to Discrete Time Markov Processes Time Series Analysis

From www.markhneedham.com

R Markov Chain Wikipedia Example Mark Needham

From math.stackexchange.com

What is the expected time to absorption in a Markov Chain given that

From www.slideserve.com

PPT Markov Chain Part 1 PowerPoint Presentation, free download ID

From www.chegg.com

Solved Consider the Markov chain with transition matrix

From www.latentview.com

Markov Chain Overview Characteristics & Applications

From gregorygundersen.com

A Romantic View of Markov Chains

From www.youtube.com

Markov Chains n-step Transition Matrix Part 3 YouTube

From www.researchgate.net

Markov chains a, Markov chain for L = 1. States are represented by

From www.quantstart.com

Hidden Markov Models An Introduction QuantStart

From www.machinelearningplus.com

Gentle Introduction to Markov Chain Machine Learning Plus

From www.slideserve.com

PPT Bayesian Methods with Monte Carlo Markov Chains II PowerPoint

From stackoverflow.com

r Creating three-state Markov chain plot Stack Overflow

From www.big-data.tips

Markov Chain Big Data Mining & Machine Learning

From www.youtube.com

L24.4 Discrete-Time Finite-State Markov Chains YouTube

From math.stackexchange.com

How to solve for the n-step state probability vector for the Markov

From youtube.com

Finite Math Markov Chain Steady-State Calculation YouTube

From www.slideserve.com

PPT Chapter 17 Markov Chains PowerPoint Presentation, free download

From www.youtube.com

Transient, recurrent states, and irreducible, closed sets in the Markov

From www.introtoalgo.com

Markov Chain

From www.slideserve.com

PPT Markov Chain Part 1 PowerPoint Presentation, free download ID

From www.youtube.com

ANU MATH1014 Markov Chain 2. Weather Example and Steady State Vector

From austingwalters.com

Markov Processes (a.k.a. Markov Chains), an Introduction

From www.researchgate.net

A Markov chain with five states, where states 3 and 5 are absorbing

From www.shiksha.com

Markov Chain Types, Properties and Applications Shiksha Online

From www.slideserve.com

PPT Markov Chains Lecture 5 PowerPoint Presentation, free download

From gregorygundersen.com

A Romantic View of Markov Chains

From www.slideserve.com

PPT Markov Chains Lecture 5 PowerPoint Presentation, free download

From userdatarheumatics.z21.web.core.windows.net

Transition Matrix Of A Markov Chain

From www.engati.com

Markov chain Engati