Markov Process Definition

A Markov process is a random process indexed by time with the property that the future is independent of the past, given the present. Equivalently, it is a stochastic process whose evolution after a given time $ t $ does not depend on its evolution before $ t $, given that the value of the process at $ t $ is fixed. In discrete time, a Markov process is a memoryless random process, i.e. a sequence of random states $ S_1, S_2, \dots $ with the Markov property. Such a process or experiment is called a Markov chain or Markov process: a mathematical system that experiences transitions from one state to another according to certain probabilistic rules. The idea of a process without after-effect was first studied by the Russian mathematician Andrey Markov.
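To make the memoryless property concrete, here is a minimal sketch of a discrete-time Markov chain in Python. The two weather states, their transition probabilities, and the helper names `step` and `simulate` are all illustrative assumptions, not taken from any of the sources listed on this page. The point is that `step` looks only at the current state, which is the Markov property $ P(S_{t+1} \mid S_t) = P(S_{t+1} \mid S_1, \dots, S_t) $.

```python
import random

# Hypothetical two-state chain; the states and probabilities are made up
# for illustration. Each row gives P(next state | current state).
TRANSITIONS = {
    "sunny": {"sunny": 0.9, "rainy": 0.1},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}


def step(state, rng):
    """Sample the next state using only the current state (Markov property)."""
    next_states = list(TRANSITIONS[state])
    weights = [TRANSITIONS[state][s] for s in next_states]
    return rng.choices(next_states, weights=weights, k=1)[0]


def simulate(start, n_steps, seed=0):
    """Return a path of n_steps + 1 states, beginning at `start`."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    path = [start]
    for _ in range(n_steps):
        # The earlier history in `path` is never consulted, only path[-1].
        path.append(step(path[-1], rng))
    return path


if __name__ == "__main__":
    print(simulate("sunny", 10))
```

Storing the transition rules as a dictionary of rows keeps the sketch dependency-free; for larger state spaces a transition matrix (for example a NumPy array whose rows sum to 1) would be the more usual representation.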

From www.geeksforgeeks.org


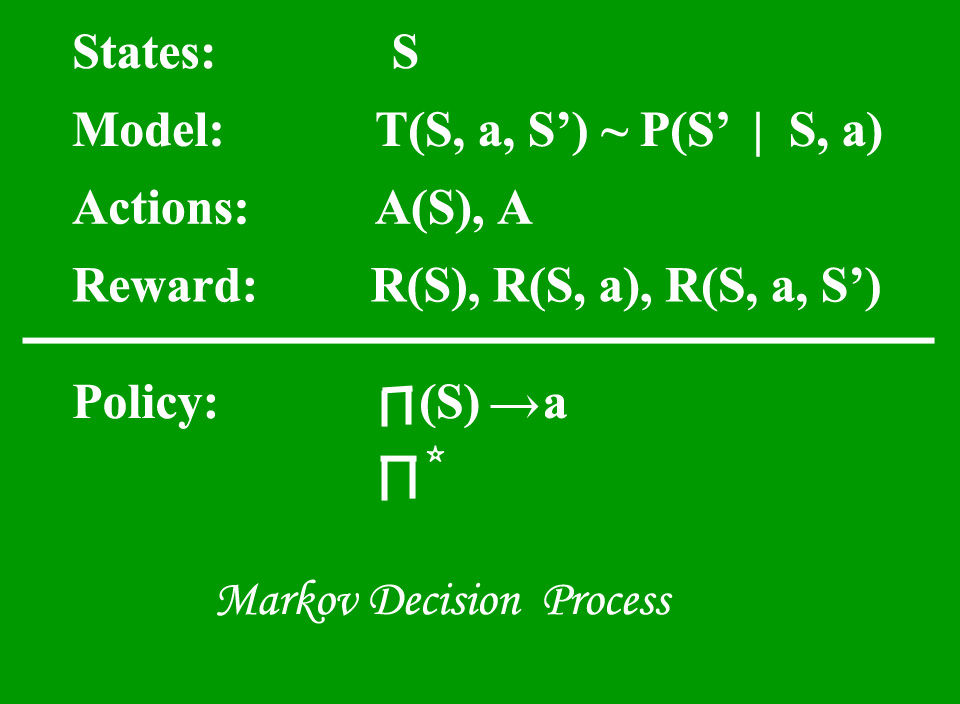

Markov Decision Process


From austingwalters.com

Markov Processes (a.k.a. Markov Chains), an Introduction

From www.researchgate.net

Five-state Markov process. Download Scientific Diagram

From medium.com

The Markov Property, Chain, Reward Process and Decision Process by

From www.geeksforgeeks.org

Markov Decision Process

From www.researchgate.net

(A) Graphical representation of the Markov process $ (X_t)_{t \in \mathbb{N}} $. (B

From www.youtube.com

Markov Decision Process Reinforcement Learning Machine Learning

From optimization.cbe.cornell.edu

Markov decision process Cornell University Computational Optimization

From www.youtube.com

L24.2 Introduction to Markov Processes YouTube

From www.slideserve.com

PPT 8. Markov Models PowerPoint Presentation, free download ID3471378

From www.52coding.com.cn

RL Markov Decision Processes NIUHE

From medium.com

Markov Decision Process(MDP) Simplified by Bibek Chaudhary Medium

From www.slideserve.com

PPT Markov Decision Processes PowerPoint Presentation, free download

From arshren.medium.com

An Introduction to Markov Decision Process by Renu Khandelwal Medium

From www.researchgate.net

Three different sub-Markov processes Download Scientific Diagram

From www.scaler.com

Markov Decision Process Scaler Topics

From www.slideserve.com

PPT Markov Decision Process PowerPoint Presentation, free download

From www.slideserve.com

PPT CSE 473 Markov Decision Processes PowerPoint Presentation, free

From www.slideserve.com

PPT Markov Processes and Birth-Death Processes PowerPoint

From www.youtube.com

Markov process YouTube

From www.slideserve.com

PPT IEG5300 Tutorial 5 Continuous-time Markov Chain PowerPoint

From www.slideserve.com

PPT Markov Processes and Birth-Death Processes PowerPoint

From www.slideserve.com

PPT Markov Decision Process PowerPoint Presentation, free download

From www.slideserve.com

PPT Markov Chains Lecture 5 PowerPoint Presentation, free download

From www.slideserve.com

PPT An Introduction to Markov Decision Processes Sarah Hickmott

From datascience.stackexchange.com

reinforcement learning What is the difference between State Value

From www.thoughtco.com

Definition and Example of a Markov Transition Matrix

From www.slideserve.com

PPT Markov Processes and Birth-Death Processes PowerPoint

From www.slideserve.com

PPT Dependability Theory and Methods 5. Markov Models PowerPoint

From www.machinelearningplus.com

Gentle Introduction to Markov Chain Machine Learning Plus

From towardsdatascience.com

Reinforcement Learning — Part 2. Markov Decision Processes by Andreas

From www.slideserve.com

PPT Markov Processes and Birth-Death Processes PowerPoint

From www.youtube.com

Markov Decision Processes YouTube

From timeseriesreasoning.com

Introduction to Discrete Time Markov Processes Time Series Analysis

From www.slideserve.com

PPT 8. Markov Models PowerPoint Presentation, free download ID3471378

From www.slideserve.com

PPT Chapter 5 PowerPoint Presentation, free download ID308017