Deep Learning Inference Server

NVIDIA Triton Inference Server™ is open-source inference serving software that streamlines AI inferencing and simplifies the deployment of deep learning models at scale in production. It enables teams to deploy any AI model from multiple deep learning and machine learning frameworks, including TensorRT, TensorFlow, PyTorch, and ONNX, and to run inference on trained models from any framework on any processor: GPU, CPU, or other. It supports all major AI frameworks and runs multiple models concurrently. Triton also includes many features and tools to help deploy deep learning at scale and in the cloud. With this kit, you can explore how to deploy Triton Inference Server in different ...
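To make "run inference on trained models" concrete, here is a minimal sketch of what a request to a running Triton server might look like over HTTP. It assumes the server exposes the KServe v2 inference protocol on the default HTTP port 8000; the model name `my_model`, the input tensor name `INPUT0`, its shape, and the helper function names are hypothetical placeholders, not taken from any real deployment.

```python
import json
import urllib.request


def build_infer_request(input_name, shape, datatype, data):
    """Build a KServe v2 style inference request body as a plain dict."""
    return {
        "inputs": [
            {
                "name": input_name,       # hypothetical tensor name
                "shape": list(shape),     # e.g. [batch, features]
                "datatype": datatype,     # e.g. "FP32"
                "data": list(data),       # flattened, row-major values
            }
        ]
    }


def infer_url(base_url, model_name):
    """Return the v2 inference endpoint for a given model."""
    return f"{base_url.rstrip('/')}/v2/models/{model_name}/infer"


def send_infer_request(base_url, model_name, body, timeout=5.0):
    """POST the request to the server and return the decoded JSON reply.

    Requires a reachable server with the named model loaded.
    """
    request = urllib.request.Request(
        infer_url(base_url, model_name),
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Assumed values for illustration only.
    body = build_infer_request("INPUT0", [1, 4], "FP32", [0.1, 0.2, 0.3, 0.4])
    print(infer_url("http://localhost:8000", "my_model"))
    print(json.dumps(body, indent=2))
```

Building the payload is kept separate from sending it so the request shape can be inspected or tested without a live server; `send_infer_request` is only useful once a model with matching input names is actually being served.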

From learn.microsoft.com

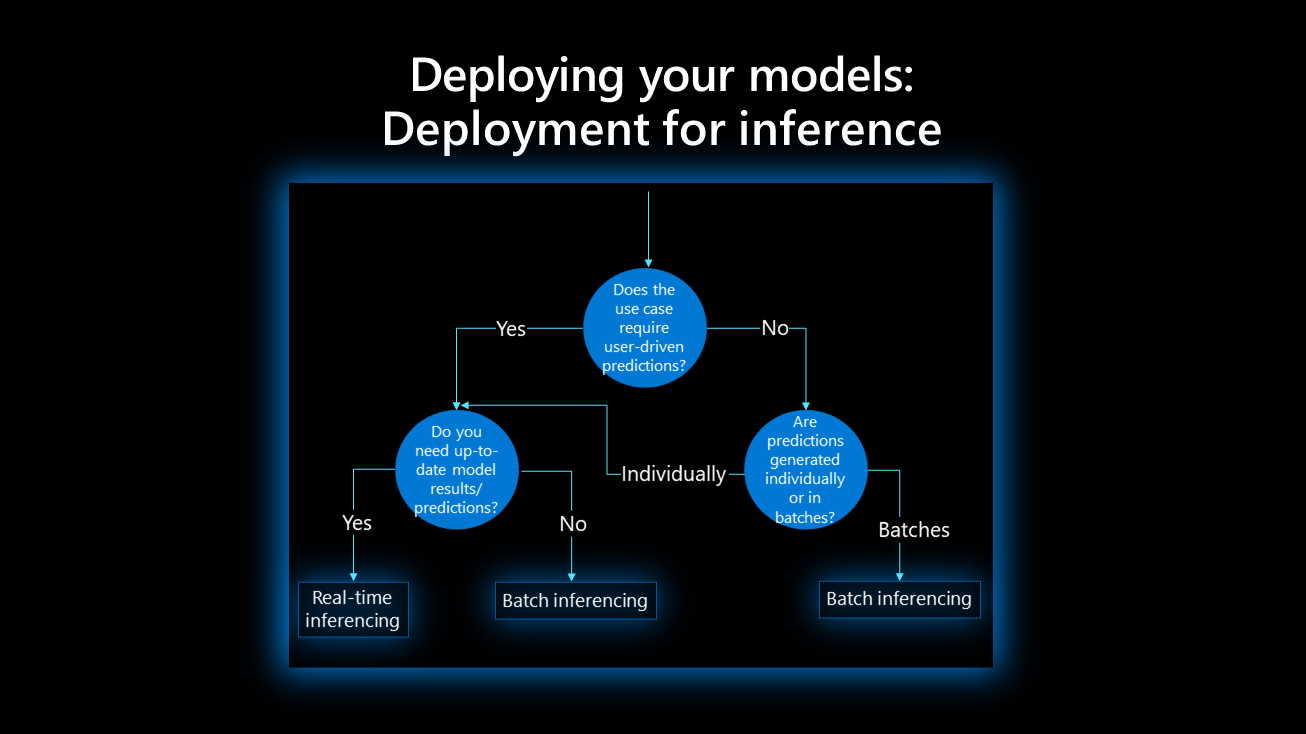

Machine learning inference during deployment Cloud Adoption Framework

From medium.com

Optimizing & scaling Deep Learning inference Part I by Partha Deka

From www.nextplatform.com

Nvidia Pushes Deep Learning Inference With New Pascal GPUs

From deepai.org

FaST-GShare: Enabling Efficient Spatio-Temporal GPU Sharing in ...

From developer.nvidia.com

NVIDIA Triton Inference Server Boosts Deep Learning Inference NVIDIA

From blogs.vmware.com

Maximizing Deep Learning Inference Performance on Intel Architecture in ...

From www.researchgate.net

(PDF) FaST-GShare: Enabling Efficient Spatio-Temporal GPU Sharing in ...

From connecttech.com

Deep Learning Servers Products Connect Tech Inc.

From resources.nvidia.com

Minimizing Deep Learning Inference Latency with NVIDIA Multi-Instance GPU

From www.eeweb.com

A Breakthrough in FPGA-Based Deep Learning Inference EE

From www.edge-ai-vision.com

Benchmark Embedded Deep Learning Inference in Minutes Edge AI and ...

From blog.51cto.com

Deep Learning Deployment Architecture: Triton Inference Server (TensorRT) as an Example, from the "Zen and the Art of Computer Programming" tech blog, 51CTO Blog

From aws.amazon.com

Deploy fast and scalable AI with NVIDIA Triton Inference Server in ...

From www.youtube.com

How to Build Deep Learning Inference Through Knative Server... Huamin

From blogs.nvidia.com

What’s the Difference Between Deep Learning Training and Inference

From iqua.ece.toronto.edu

Distributed Inference of Deep Learning Models iQua - iQua Group

From deci.ai

The Ultimate Deep Learning Inference Acceleration Guide Deci

From developer.nvidia.com

Boosting AI Model Inference Performance on Azure Machine Learning

From developer.nvidia.com

Choosing a Server for Deep Learning Inference NVIDIA Technical Blog

From aws.amazon.com

Using Fewer Resources to Run Deep Learning Inference on Intel FPGA Edge

From community.intel.com

The Difference Between Deep Learning Training and Inference Intel

From cloudxlab.com

Inference Engine & integration with Deep Learning applications CloudxLab

From www.pinterest.jp

NVIDIA Triton Inference Server Boosts Deep Learning Inference Deep ...

From developer.nvidia.com

Serving ML Model Pipelines on NVIDIA Triton Inference Server with ...

From developer.nvidia.com

Deep Learning Software NVIDIA Developer

From www.youtube.com

Production Deep Learning Inference with NVIDIA Triton Inference Server

From neuralmagic.com

Deploy Serverless Machine Learning Inference on AWS with DeepSparse

From capalearning.com

What Is Inference In Machine Learning? Capa Learning

From www.researchgate.net

The intelligent collaborative inference system architecture with three ...

From developer.nvidia.com

Minimizing Deep Learning Inference Latency with NVIDIA Multi-Instance ...

From www.nvidia.com

Easily Deploy AI Deep Learning Models at Scale with Triton Inference ...

From mooccourse.wordpress.com

Deep Learning Inference with Azure ML Studio (Coursera) MOOC Course

From neuralservers.com

Deep Learning Neural Servers