Transformers Explained Attention

To understand transformers, we must first understand the attention mechanism. Attention is a concept that helped improve the performance of neural machine translation applications, and the transformer gets its power from its attention module. The module is effective because it captures the relationships between each word in a sequence and every other word. Topics covered include the challenges with RNNs and how transformer models can help overcome them, and how attention is used in the transformer.

In the transformer, the attention module repeats its computations multiple times in parallel. Each of these parallel attention processors is called an attention head. All of these similar attention calculations are then combined, which gives the attention greater power of discrimination.

Attention is used in the transformer in three places: self-attention in the encoder, self-attention in the decoder, and encoder-decoder attention in the decoder.
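The idea of several attention heads running in parallel and then being combined can be illustrated with a minimal NumPy sketch. The text above does not give an implementation, so this is only an illustration under stated assumptions: it uses the standard scaled dot-product formulation, random weight matrices in place of learned ones, and hypothetical names and sizes (`multi_head_self_attention`, `d_model = 8`, `num_heads = 2`) chosen purely for the example.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v):
    """Score every position against every other position, then mix the values."""
    d_k = q.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)  # (..., seq, seq)
    weights = softmax(scores, axis=-1)                   # each row sums to 1
    return weights @ v, weights


def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Run num_heads attention heads in parallel and combine their outputs."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads  # assumes d_model is divisible by num_heads

    def split_heads(t):
        # (seq, d_model) -> (heads, seq, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q = split_heads(x @ w_q)
    k = split_heads(x @ w_k)
    v = split_heads(x @ w_v)

    # All heads are computed at once thanks to batched matrix multiplication.
    heads, weights = scaled_dot_product_attention(q, k, v)

    # Combine the heads: concatenate along the feature axis, then project.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights


# Illustrative sizes and random (untrained) weights; all values are assumptions.
rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 4, 8, 2
x = rng.normal(size=(seq_len, d_model))  # one embedding vector per word
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out, weights = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape)      # (4, 8): one output vector per word
print(weights.shape)  # (2, 4, 4): per head, a word-by-word attention matrix
```

Each head's `weights[h]` is a `seq_len × seq_len` matrix, which is one way to see how attention relates each word to every other word. A trained model would learn `w_q`, `w_k`, `w_v`, and `w_o` rather than sample them.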

From machinelearningmastery.com

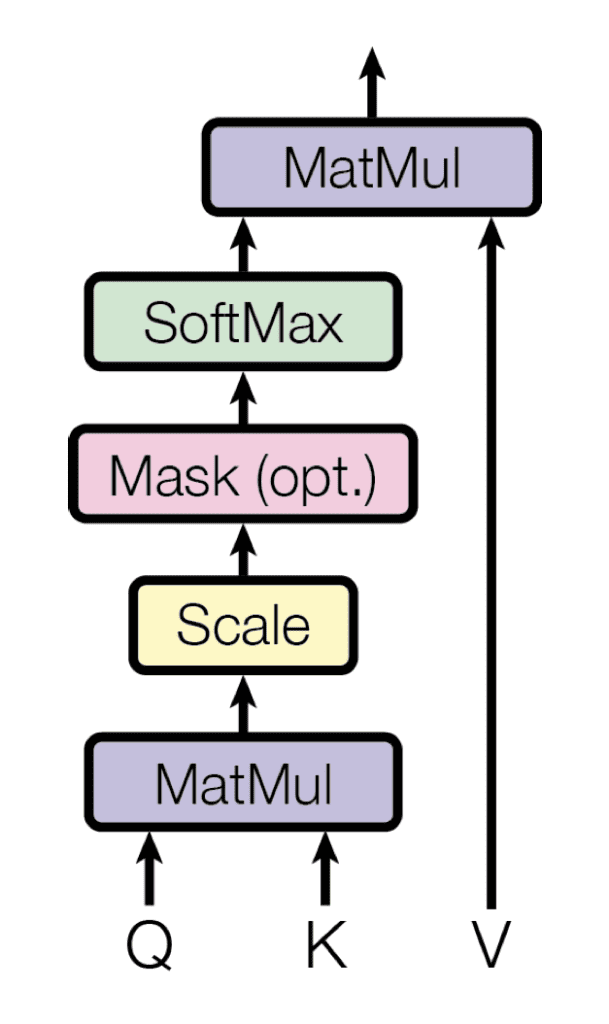

The Transformer Attention Mechanism

From medium.com

Attention Mechanisms in Transformers by Aaqilah Medium

From medium.com

Transformer The SelfAttention Mechanism by Sudipto Baul Machine

From www.vrogue.co

Visualization Of Attention Mechanism In Transformer A vrogue.co

From vaclavkosar.com

Transformer’s SelfAttention Mechanism Simplified

From www.researchgate.net

The vanilla selfattention mechanism in Transformer. Download

From towardsdatascience.com

Transformers Explained Visually (Part 3) Multihead Attention, deep

From www.youtube.com

Transformer SelfAttention Mechanism Explained Attention Is All You

From medium.com

Understanding and Coding the Attention Mechanism — The Magic Behind

From www.youtube.com

EE599 Project 12 Transformer and SelfAttention mechanism YouTube

From luv-bansal.medium.com

Transformer — Attention Is All You Need Easily Explained With

From ketanhdoshi.github.io

Transformers Explained Visually Multihead Attention, deep dive

From www.comet.com

Explainable AI Visualizing Attention in Transformers Comet

From ketanhdoshi.github.io

Transformers Explained Visually How it works, stepbystep Ketan

From medium.com

Attention Mechanisms in Transformers by Aaqilah Medium

From www.scribd.com

Transformers explained “Attention is all you need.” PDF Applied

From www.youtube.com

Transformers are RNNs Fast Autoregressive Transformers with Linear

From developers.reinfer.io

Efficient Transformers I Attention Mechanisms Reinfer Docs

From lilianweng.github.io

Attention? Attention!

From www.scribd.com

Transformers Explained Visually (Part 3)_ Multihead Attention, Deep

From blog.pratiksanghavi.in

Transformers Explained Part I

From learnopencv.com

Understanding Attention Mechanism in Transformer Neural Networks

From www.comet.com

Explainable AI Visualizing Attention in Transformers Comet

From medium.com

Attention Mechanisms in Transformers by Aaqilah Medium

From www.analyticsvidhya.com

A Comprehensive Guide to Attention Mechanism in Deep Learning

From ketanhdoshi.github.io

Transformers Explained Visually Multihead Attention, deep dive

From ketanhdoshi.github.io

Transformers Explained Visually Multihead Attention, deep dive

From www.youtube.com

Quantifying Attention Flow In Transformers (Effective Way to Interpret

From machinelearningmastery.com

The Transformer Attention Mechanism

From machinelearningmastery.com

The Transformer Attention Mechanism

From www.researchgate.net

Transformer layer with reattention mechanism vs. selfattention

From www.youtube.com

Transformer Architecture Explained Attention Is All You Need

From ketanhdoshi.github.io

Transformers Explained Visually Multihead Attention, deep dive

From paperswithcode.com

Attention Free Transformer Explained Papers With Code

From www.youtube.com

Transformers Explained Attention Simplified! YouTube

From medium.com

Transformers (2017) one minute summary by Jeffrey Boschman One