Back Propagation Neural Network Mathematics

Backpropagation, short for "backward propagation of errors," is an algorithm for supervised learning of artificial neural networks using gradient descent, and it is an essential technique for optimizing them. Its goal is to adjust the weights so that the network learns to map arbitrary inputs to the correct outputs.

The algorithm is iterative. On each step it takes the network's output error and propagates it backwards through the network, determining which paths have the greatest influence on the output and therefore which weights and biases should be adjusted, and by how much, to minimize the cost function. The error signal travels back through the entire network (hence the name), and each hidden unit receives a share of the output error that is proportional to the weight of its connection to the output, scaled by the slope of its activation function.

For the rest of this tutorial we’re going to work with a single training example: given inputs 0.05 and 0.10, we want the neural network to output 0.01 and 0.99.

Backpropagation in recurrent neural networks (RNNs) is a little trickier. In a feedforward network, a node's output never feeds back into that same node, but in an RNN the output of the current step is fed as an input to the same node at the next step, so the error has to be propagated backwards through the time steps as well as through the layers.
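
The single training example above can be worked through in a few lines of Python. This is a minimal sketch, not the tutorial's own code: it assumes a 2-2-2 network with sigmoid activations, one shared bias per layer, the squared-error cost E = ½Σ(t − o)², a learning rate of 0.5, and hypothetical starting weights chosen only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical starting parameters (illustration only, not taken from the source).
# w_h[j][i]: input i -> hidden unit j;  w_o[k][j]: hidden unit j -> output k.
params = {
    "w_h": [[0.15, 0.20], [0.25, 0.30]],
    "b_h": 0.35,  # one bias shared by both hidden units (assumption)
    "w_o": [[0.40, 0.45], [0.50, 0.55]],
    "b_o": 0.60,  # one bias shared by both output units (assumption)
}
x = [0.05, 0.10]       # inputs from the example
target = [0.01, 0.99]  # desired outputs from the example
lr = 0.5               # assumed learning rate

def forward(p, x):
    h = [sigmoid(sum(p["w_h"][j][i] * x[i] for i in range(2)) + p["b_h"]) for j in range(2)]
    o = [sigmoid(sum(p["w_o"][k][j] * h[j] for j in range(2)) + p["b_o"]) for k in range(2)]
    return h, o

def total_error(o, t):
    # E = sum_k 1/2 (t_k - o_k)^2
    return sum(0.5 * (tk - ok) ** 2 for tk, ok in zip(t, o))

def train_step(p, x, t, lr):
    h, o = forward(p, x)
    # Output deltas: dE/dz_k = (o_k - t_k) * o_k * (1 - o_k)
    delta_o = [(o[k] - t[k]) * o[k] * (1 - o[k]) for k in range(2)]
    # Hidden deltas: each output delta flows back in proportion to its connecting
    # weight, then is scaled by the hidden unit's sigmoid slope h_j * (1 - h_j)
    delta_h = [sum(delta_o[k] * p["w_o"][k][j] for k in range(2)) * h[j] * (1 - h[j])
               for j in range(2)]
    # Gradient-descent updates; delta_h above already used the *old* output weights
    for k in range(2):
        for j in range(2):
            p["w_o"][k][j] -= lr * delta_o[k] * h[j]
    for j in range(2):
        for i in range(2):
            p["w_h"][j][i] -= lr * delta_h[j] * x[i]
    p["b_o"] -= lr * sum(delta_o)  # shared bias: gradient is the sum of the layer's deltas
    p["b_h"] -= lr * sum(delta_h)

initial = total_error(forward(params, x)[1], target)
for _ in range(10_000):
    train_step(params, x, target, lr)
final = total_error(forward(params, x)[1], target)
print(f"total error: {initial:.9f} -> {final:.9f}")
```

Each call to `train_step` performs one forward pass, one backward pass and one gradient-descent update; repeating it drives the two outputs toward 0.01 and 0.99. The hidden deltas are computed before any weight is changed, so every gradient in a step refers to the same set of parameters.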

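For the recurrent case, the usual approach is backpropagation through time: unroll the network over the sequence, then walk the unrolled steps in reverse, accumulating gradients for weights that are shared across steps. The sketch below uses a hypothetical scalar RNN (not from the source), h_t = tanh(w·h_{t−1} + u·x_t) with h_0 = 0 and a squared-error loss on the final state only, and checks the backpropagated gradients against central finite differences.

```python
import math

def forward(w, u, xs):
    """Run the RNN over the sequence and return every hidden state h_0..h_T."""
    hs = [0.0]  # h_0 = 0 (assumed initial state)
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs

def loss(w, u, xs, y):
    # Squared error on the final hidden state only (assumed loss)
    return 0.5 * (forward(w, u, xs)[-1] - y) ** 2

def bptt(w, u, xs, y):
    """Gradients dL/dw and dL/du via backpropagation through time."""
    hs = forward(w, u, xs)
    dh = hs[-1] - y                    # dL/dh_T
    dw = du = 0.0
    for t in range(len(xs), 0, -1):    # walk the unrolled steps in reverse
        dz = dh * (1.0 - hs[t] ** 2)   # back through tanh: tanh'(z_t) = 1 - h_t^2
        dw += dz * hs[t - 1]           # w is reused at every step, so its gradient accumulates
        du += dz * xs[t - 1]
        dh = dz * w                    # error handed to the previous step's hidden state
    return dw, du

# Hypothetical parameters and sequence, checked against central finite differences
w, u, xs, y = 0.5, 0.8, [1.0, -0.5, 0.25], 0.3
dw, du = bptt(w, u, xs, y)
eps = 1e-6
num_dw = (loss(w + eps, u, xs, y) - loss(w - eps, u, xs, y)) / (2 * eps)
num_du = (loss(w, u + eps, xs, y) - loss(w, u - eps, xs, y)) / (2 * eps)
print(f"dL/dw: bptt={dw:.8f}  numeric={num_dw:.8f}")
print(f"dL/du: bptt={du:.8f}  numeric={num_du:.8f}")
```

Because the same `w` is applied at every step, its gradient is a sum over time, and the repeated factor `w * tanh'` in `dh` is why gradients over long sequences tend to shrink or blow up.
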
From towardsdatascience.com

How Does Back-Propagation Work in Neural Networks? by Kiprono Elijah

From www.researchgate.net

A three-layer backpropagation (BP) neural network structure

From www.researchgate.net

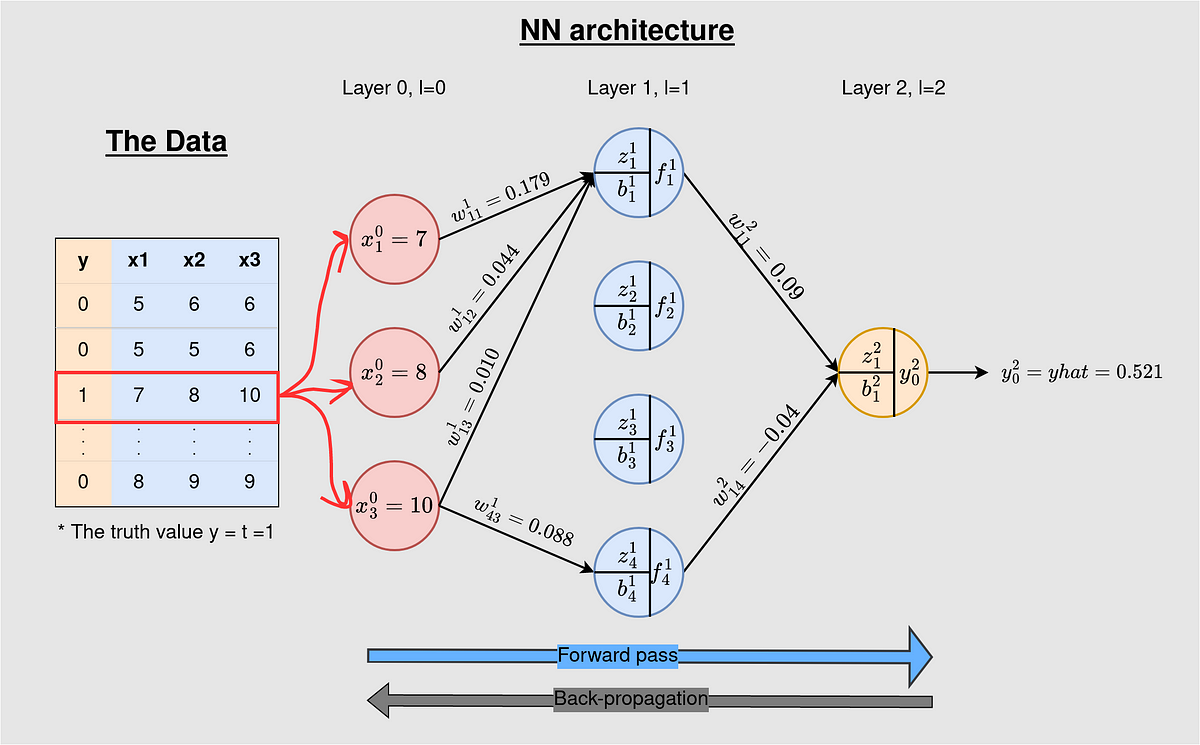

Feedforward Backpropagation Neural Network architecture.

From www.jeremyjordan.me

Neural networks training with backpropagation.

From www.researchgate.net

Structure and schematic diagram of the backpropagation neural network

From www.researchgate.net

The structure of back propagation neural network (BPN).

From www.youtube.com

Solved Example Back Propagation Algorithm Neural Networks

From www.youtube.com

Neural Networks 11 Backpropagation in detail

From georgepavlides.info

Matrix-based implementation of neural network backpropagation training
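
A matrix-based implementation like the one named above processes a whole mini-batch with one matrix product per layer instead of looping over individual weights. The following NumPy sketch assumes a 2-3-2 sigmoid network, a squared-error cost averaged over the batch, random data and weights (all hypothetical), and plain gradient descent; it is one possible vectorization, not the implementation behind the figure.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)

# Hypothetical mini-batch: 4 examples (rows), 2 inputs, 2 targets each
X = rng.random((4, 2))
T = rng.random((4, 2))

# Assumed 2-3-2 network; W1[i, j] connects input i to hidden unit j
W1 = rng.normal(scale=0.5, size=(2, 3))
b1 = np.zeros(3)
W2 = rng.normal(scale=0.5, size=(3, 2))
b2 = np.zeros(2)
lr = 0.5
n = len(X)

def batch_loss():
    H = sigmoid(X @ W1 + b1)
    Y = sigmoid(H @ W2 + b2)
    return 0.5 * np.sum((Y - T) ** 2) / n  # squared error averaged over the batch

before = batch_loss()
for _ in range(2000):
    # Forward pass for the whole batch at once
    H = sigmoid(X @ W1 + b1)              # (4, 3) hidden activations
    Y = sigmoid(H @ W2 + b2)              # (4, 2) outputs
    # Backward pass: one matrix product per layer
    D2 = (Y - T) * Y * (1 - Y) / n        # (4, 2) output deltas
    D1 = (D2 @ W2.T) * H * (1 - H)        # (4, 3) hidden deltas, using the current W2
    # Gradient-descent updates; the matrix products sum each gradient over the batch
    W2 -= lr * (H.T @ D2)
    b2 -= lr * D2.sum(axis=0)
    W1 -= lr * (X.T @ D1)
    b1 -= lr * D1.sum(axis=0)
after = batch_loss()
print(f"batch loss: {before:.6f} -> {after:.6f}")
```

The term `D2 @ W2.T` is the matrix form of sending each output error back along its connecting weights; the products `H.T @ D2` and `X.T @ D1` sum the per-example weight gradients in a single step.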

From www.researchgate.net

Mathematical description of backpropagation artificial neural network
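
A mathematical description of backpropagation usually reduces to a few layer-by-layer equations. The notation here is assumed for this sketch rather than taken from the figure: layer l computes z^{(l)} = W^{(l)} a^{(l-1)} + b^{(l)} and a^{(l)} = σ(z^{(l)}), δ^{(l)} = ∂C/∂z^{(l)}, L is the output layer, η is the learning rate, and ⊙ is element-wise multiplication. For the squared-error cost C = ½‖a^{(L)} − t‖², the gradient ∇_{a^{(L)}} C is simply a^{(L)} − t.

$$
\begin{aligned}
\delta^{(L)} &= \nabla_{a^{(L)}} C \odot \sigma'\big(z^{(L)}\big) \\
\delta^{(l)} &= \Big( \big(W^{(l+1)}\big)^{\top} \delta^{(l+1)} \Big) \odot \sigma'\big(z^{(l)}\big) \\
\frac{\partial C}{\partial W^{(l)}} &= \delta^{(l)} \big(a^{(l-1)}\big)^{\top},
\qquad
\frac{\partial C}{\partial b^{(l)}} = \delta^{(l)} \\
W^{(l)} &\leftarrow W^{(l)} - \eta\, \frac{\partial C}{\partial W^{(l)}},
\qquad
b^{(l)} \leftarrow b^{(l)} - \eta\, \frac{\partial C}{\partial b^{(l)}}
\end{aligned}
$$

The second line is the precise form of the error signal being passed back "in proportion to the weight of the connection": each hidden delta is a weighted sum of the deltas in the next layer, multiplied by the slope of the unit's activation.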

From www.researchgate.net

Architecture of backpropagation neural network (BPNN) with one hidden…

From www.researchgate.net

Structure of back propagation neural network.

From medium.com

Neural networks and backpropagation explained in a simple way by…

From www.researchgate.net

Structure diagram of back propagation neural network.

From www.researchgate.net

Structure of the backpropagation neural network.

From www.youtube.com

Back Propagation Neural Network Basic Concepts Neural Networks

From www.researchgate.net

Back propagation principle diagram of neural network. The Min-batch…

From www.researchgate.net

Back propagation neural network topology structural diagram.

From www.researchgate.net

Illustration of the architecture of the back propagation neural network

From www.researchgate.net

Architecture of the backpropagation neural network (BPNN) algorithm

From www.datasciencecentral.com

Neural Networks The Backpropagation algorithm in a picture

From www.researchgate.net

Basic structure of backpropagation neural network.

From www.slideshare.net

Backpropagation and Gradient Descent in Neural Networks

From studyglance.in

Back Propagation NN Tutorial

From www.researchgate.net

Backpropagation neural network (BPNN).

From builtin.com

Backpropagation in a Neural Network Explained

From www.researchgate.net

The architecture of back propagation function neural network diagram

From towardsdatascience.com

Understanding Backpropagation Algorithm by Simeon Kostadinov

From www.geeksforgeeks.org

Backpropagation in Neural Network

From www.vrogue.co

Understanding Backpropagation In Neural Network A Ste…

From www.researchgate.net

The structure of back propagation neural network.

From www.3blue1brown.com

3Blue1Brown Backpropagation calculus
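
The chain-rule calculus behind backpropagation can be written out for the simplest case, a chain with one neuron per layer. Following the convention commonly used in that lesson (assumed here), the last layer computes z^{(L)} = w^{(L)} a^{(L-1)} + b^{(L)} and a^{(L)} = σ(z^{(L)}), and the cost for one training example is C_0 = (a^{(L)} − y)²:

$$
\begin{aligned}
\frac{\partial C_0}{\partial w^{(L)}}
  &= \frac{\partial z^{(L)}}{\partial w^{(L)}}\,
     \frac{\partial a^{(L)}}{\partial z^{(L)}}\,
     \frac{\partial C_0}{\partial a^{(L)}}
   = a^{(L-1)}\,\sigma'\big(z^{(L)}\big)\,2\big(a^{(L)} - y\big) \\
\frac{\partial C_0}{\partial b^{(L)}}
  &= \sigma'\big(z^{(L)}\big)\,2\big(a^{(L)} - y\big) \\
\frac{\partial C_0}{\partial a^{(L-1)}}
  &= w^{(L)}\,\sigma'\big(z^{(L)}\big)\,2\big(a^{(L)} - y\big)
\end{aligned}
$$

The last line is the error signal handed back to the previous layer; applying the same three-factor product there gives ∂C_0/∂w^{(L-1)}, and so on back to the input.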

From www.tpsearchtool.com

Figure 1 From Derivation Of Backpropagation In Convolutional Neural…

From www.researchgate.net

Network Architecture of Back Propagation Neural Network.

From www.researchgate.net

Schematic representation of a model of back propagation neural network