PyTorch Fix Embedding

nn.Embedding is a PyTorch layer that maps indices from a fixed vocabulary to dense vectors of fixed size, known as embeddings. It is a simple lookup table that stores the embeddings of a fixed dictionary and size: each index selects one row of a learnable weight matrix (the embedding matrix), and that row is the dense vector of the chosen dimension. The module is often used to store word embeddings and retrieve them using indices. The functional form is torch.nn.functional.embedding(input, weight, padding_idx=None, max_norm=None, norm_type=2.0, scale_grad_by_freq=False, ...).

This simple operation is the foundation of many advanced NLP architectures, because it allows discrete input symbols to be processed in a continuous space. An embedding layer is also equivalent to a linear layer without the bias term, applied to one-hot encoded indices. Note that if you feed the embedding output directly to an LSTM, the LSTM's input size is fixed to a context size of 1.
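As a minimal sketch of the lookup-table behaviour and of the claimed equivalence with a bias-free linear layer, the snippet below compares three ways of getting the same vectors: calling nn.Embedding, calling torch.nn.functional.embedding with the same weight, and passing one-hot vectors through nn.Linear(bias=False). The sizes (a vocabulary of 10 and vectors of dimension 4) and the variable names are assumptions chosen for illustration, not values from any of the linked articles.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical sizes, chosen only for this illustration.
vocab_size, embedding_dim = 10, 4

embedding = nn.Embedding(vocab_size, embedding_dim)
indices = torch.tensor([1, 5, 7])

# 1) Module call: each index selects one row of embedding.weight.
looked_up = embedding(indices)  # shape (3, 4)

# 2) Functional call with the same weight matrix gives the same rows.
functional_out = F.embedding(indices, embedding.weight)

# 3) Bias-free linear layer applied to one-hot vectors.
#    nn.Linear stores its weight as (out_features, in_features),
#    so the embedding matrix has to be transposed when copied in.
linear = nn.Linear(vocab_size, embedding_dim, bias=False)
with torch.no_grad():
    linear.weight.copy_(embedding.weight.T)

one_hot = F.one_hot(indices, num_classes=vocab_size).float()
linear_out = linear(one_hot)

print(looked_up.shape)
print(torch.equal(looked_up, embedding.weight[indices]))
print(torch.allclose(looked_up, linear_out))
```

The lookup avoids materialising the one-hot matrix, which is why the embedding layer is usually preferred over the linear formulation for large vocabularies.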

From opensourcebiology.eu

PyTorch Linear and PyTorch Embedding Layers Open Source Biology

From github.com

GitHub vfdev5/FixMatchpytorch Implementation of FixMatch in

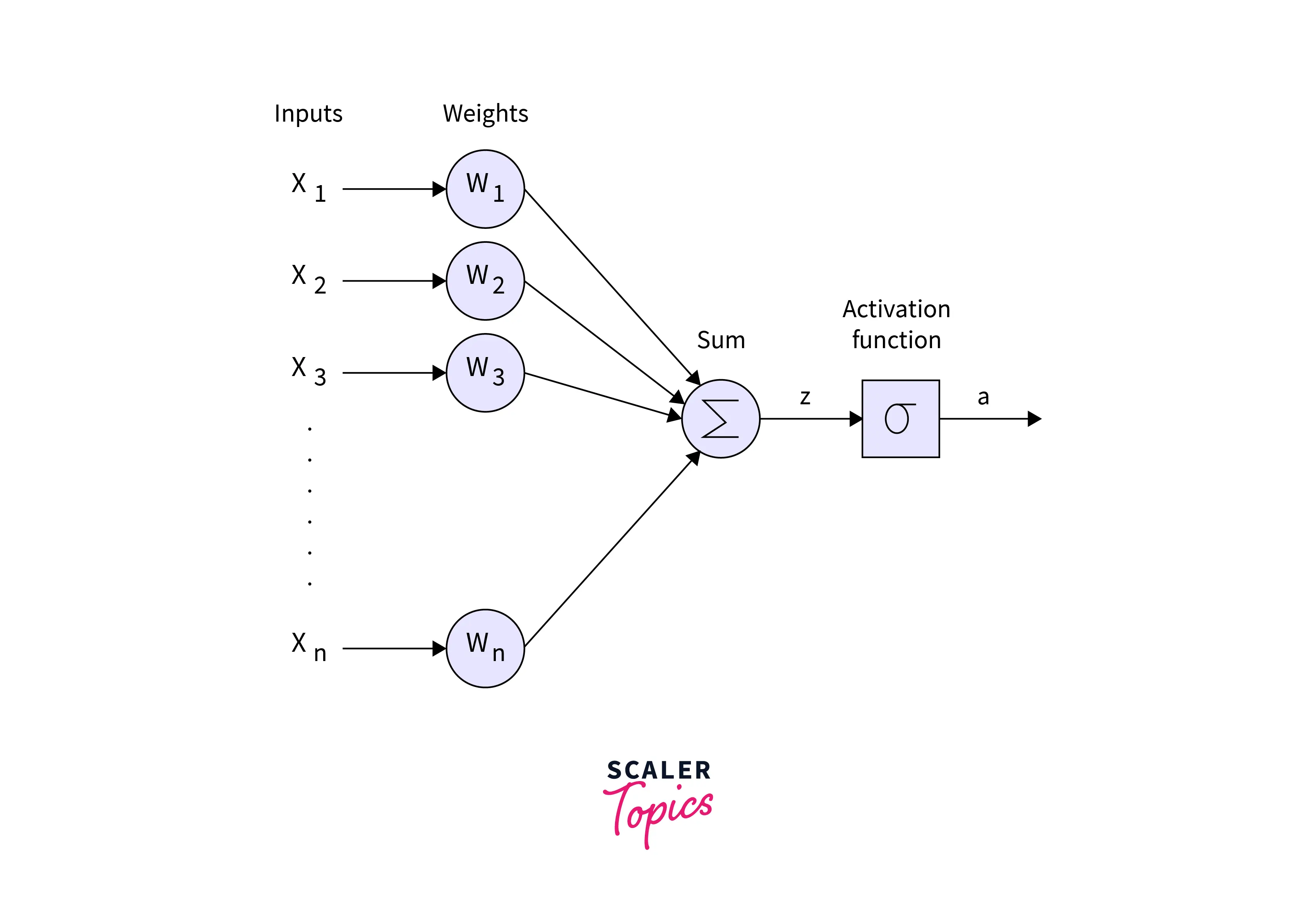

From www.scaler.com

PyTorch Linear and PyTorch Embedding Layers Scaler Topics

From jamesmccaffrey.wordpress.com

Anomaly Detection for Tabular Data Using a PyTorch Transformer with

From www.youtube.com

nn.Embedding in PyTorch YouTube

From towardsdatascience.com

PyTorch Geometric Graph Embedding by Anuradha Wickramarachchi

From github.com

Torch/Pytorch Install Error (FIX!) · AUTOMATIC1111 stablediffusion

From wandb.ai

Interpret any PyTorch Model Using W&B Embedding Projector embedding

From github.com

fixmatchpytorch/label_guessor.py at master · CoinCheung/fixmatch

From www.youtube.com

What are PyTorch Embeddings Layers (6.4) YouTube

From github.com

GitHub xdweixia/GECMC The Pytorch implementation for our TIP paper

From github.com

GitHub fbuchert/fixmatchpytorch PyTorch implementation of FixMatch

From github.com

Embedding layer tensor shape · Issue 99268 · pytorch/pytorch · GitHub

From github.com

GitHub valencebond/FixMatch_pytorch Unofficial PyTorch

From github.com

[bug] Fix Pytorch profiler with emit_nvtx by tchaton · Pull Request

From github.com

GitHub manitadayon/pytorchforecasting_Nhits_fix Time series

From www.bilibili.com

PyTorch Tutorial 17 Saving and Load... 哔哩哔哩

From www.youtube.com

Understanding Embedding Layer in Pytorch YouTube

From www.educba.com

PyTorch Embedding Complete Guide on PyTorch Embedding

From towardsdatascience.com

The Secret to Improved NLP An InDepth Look at the nn.Embedding Layer

From barkmanoil.com

Pytorch Nn Embedding? The 18 Correct Answer

From lightning.ai

Introduction to Coding Neural Networks with PyTorch + Lightning

From blog.csdn.net

PyTorch notes: torch.nn.Embedding (PyTorch embedding weights), CSDN Blog

From discuss.pytorch.org

Is embedding layer different from linear layer nlp PyTorch Forums

From coderzcolumn.com

Word Embeddings for PyTorch Text Classification Networks

From clay-atlas.com

[PyTorch] Use "Embedding" Layer To Process Text ClayTechnology World

From www.aritrasen.com

Deep Learning with Pytorch Text Generation LSTMs 3.3

From www.scaler.com

How to Install PyTorch? Scaler Topics

From blog.acolyer.org

PyTorchBigGraph a largescale graph embedding system the morning paper

From pytorch.org

Optimizing Production PyTorch Models’ Performance with Graph

From github.com

FixMatchpytorch/dataset/randaugment.py at master · kekmodel/FixMatch

From theaisummer.com

Pytorch AI Summer

From stackoverflow.com

deep learning Faster way to do multiple embeddings in PyTorch

From www.youtube.com

[pytorch] Embedding, LSTM: Understanding Input/Output Tensor Shapes and Modeling YouTube