American Sign Language Recognition

"By improving American Sign Language recognition, this work contributes to creating tools that can enhance communication for the deaf and hard-of-hearing community," says Stella Batalama, PhD, dean of the FAU College of Engineering and Computer Science. "The model's ability to reliably interpret gestures opens the door to more inclusive solutions that support accessibility, making daily ..."

Image of Sign Language 'F' from Pexels

Sign language is a form of communication used primarily by people who are deaf or hard of hearing. This type of gesture ...

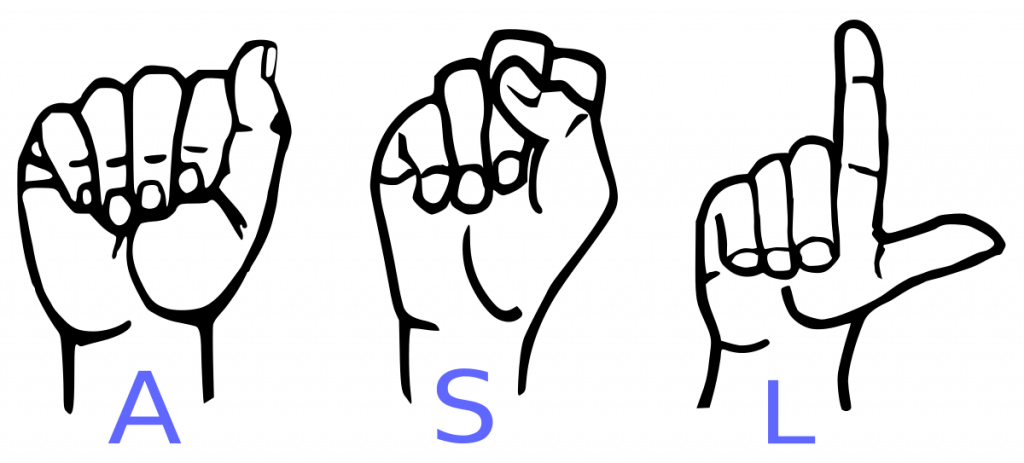

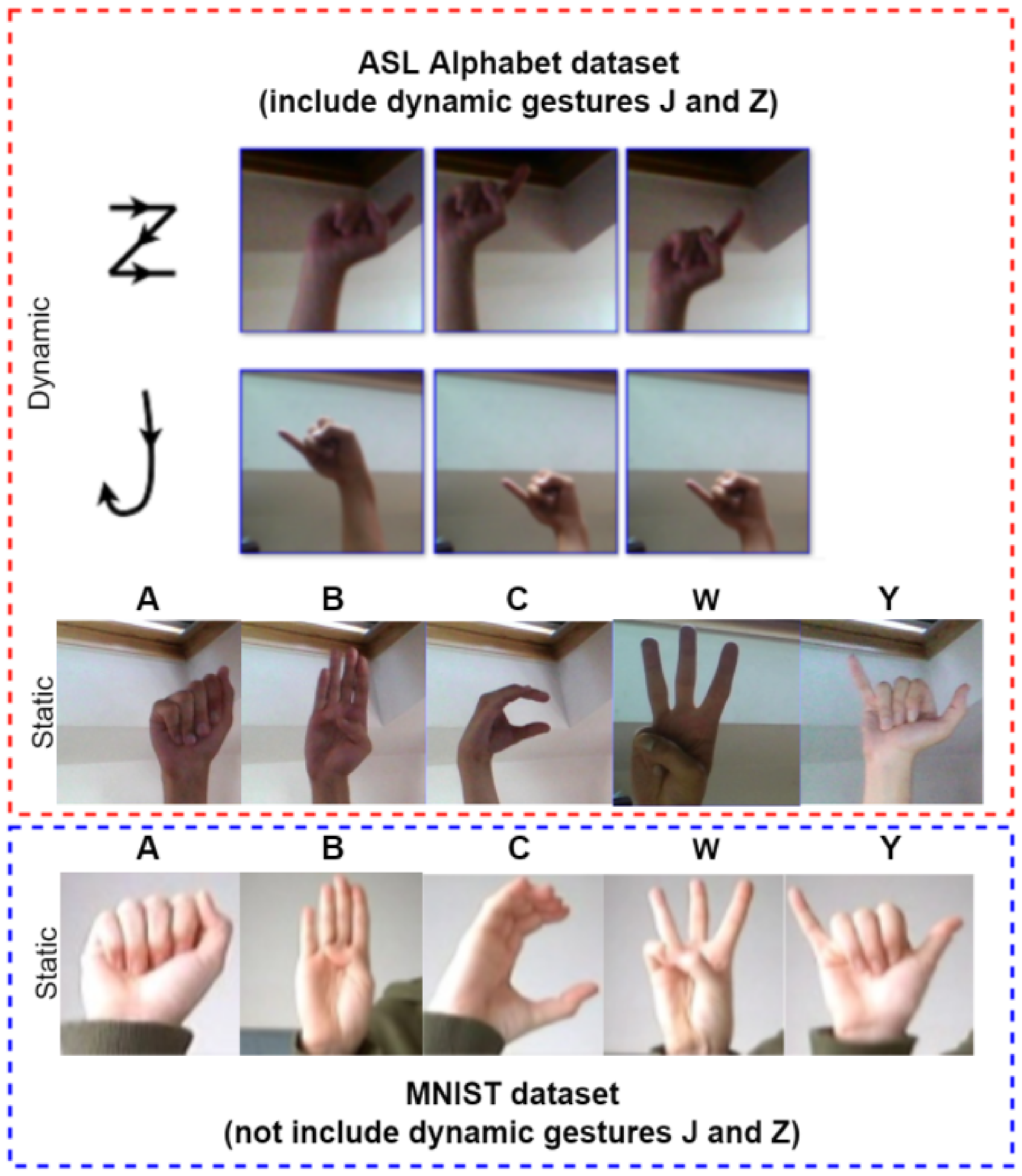

This article discusses a simple and efficient vision-based approach to American Sign Language (ASL) alphabet recognition that handles both static and dynamic gestures. MediaPipe, introduced by Google, was used to extract hand landmarks, and a custom dataset was created and used for the experimental study.
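To make the landmark step more concrete, here is a minimal sketch of how hand landmarks might be turned into classifier features. It assumes the 21 (x, y, z) landmarks per hand that MediaPipe Hands reports, with index 0 as the wrist. The function name `normalize_landmarks` and the wrist-relative, max-distance normalization are illustrative assumptions, not the article's documented method.

```python
import math

NUM_LANDMARKS = 21  # MediaPipe Hands reports 21 landmarks per detected hand


def normalize_landmarks(landmarks):
    """Return a flat, wrist-relative, scale-normalized feature vector.

    `landmarks` is a sequence of 21 (x, y, z) tuples, with index 0 as the wrist.
    This normalization scheme is an assumption made for illustration.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")

    wrist_x, wrist_y, wrist_z = landmarks[0]
    # Translate so the wrist sits at the origin (removes hand position in the frame).
    centered = [(x - wrist_x, y - wrist_y, z - wrist_z) for x, y, z in landmarks]

    # Divide by the largest wrist-to-landmark distance (removes apparent hand size).
    scale = max(math.sqrt(x * x + y * y + z * z) for x, y, z in centered)
    if scale == 0.0:
        raise ValueError("degenerate hand: all landmarks coincide")

    # Flatten to 63 numbers: x0, y0, z0, x1, y1, z1, ...
    return [coord / scale for point in centered for coord in point]
```

The resulting 63-value vector does not change when the hand moves within the frame or appears larger or smaller, which is one reason landmark features can be easier to classify than raw pixels.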

A study described as the first of its kind recognizes American Sign Language (ASL) alphabet gestures using computer vision. Researchers developed a custom dataset of 29,820 static images of ASL hand ...

(PDF) American Sign Language Recognition Model Using Complex Zernike ...

Abstract: Our paper presents a two-pronged ablation study for sign language recognition for American Sign Language (ASL) characters on two datasets. Experimentation revealed that hyperparameter tuning, data augmentation, and hand landmark detection can help improve accuracy. The final model achieved a test accuracy of 96.42%. Future work includes running the model for a greater number of ...
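As an illustration of what data augmentation can look like for hand-shape data, the sketch below applies a small random rotation and scale change to 2D points such as hand landmarks. The abstract does not say which augmentations the paper used, so the function names `rotate_and_scale` and `augment`, along with the default ranges, are assumptions chosen for the example.

```python
import math
import random


def rotate_and_scale(points, angle_deg, scale):
    """Rotate 2D points about their centroid by `angle_deg`, then scale by `scale`."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    cos_a = math.cos(math.radians(angle_deg))
    sin_a = math.sin(math.radians(angle_deg))
    out = []
    for x, y in points:
        dx, dy = x - cx, y - cy
        out.append((cx + scale * (dx * cos_a - dy * sin_a),
                    cy + scale * (dx * sin_a + dy * cos_a)))
    return out


def augment(points, rng, max_angle_deg=15.0, max_scale_delta=0.1):
    """Apply one random rotation and scale drawn from the (assumed) ranges."""
    angle = rng.uniform(-max_angle_deg, max_angle_deg)
    scale = rng.uniform(1.0 - max_scale_delta, 1.0 + max_scale_delta)
    return rotate_and_scale(points, angle, scale)


# Usage: generate three augmented copies of one hypothetical sample.
rng = random.Random(0)
sample = [(0.2, 0.3), (0.4, 0.3), (0.4, 0.6), (0.2, 0.6)]
augmented_copies = [augment(sample, rng) for _ in range(3)]
```

Keeping the ranges small matters here, because a large rotation can turn one letter's hand shape into something that resembles another.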

To bridge this gap and connect deaf and nonspeaking people with the rest of the world, a deep-learning-based Sign Language Recognition (SLR) model has been introduced to improve how sign language is integrated into daily life and to enhance communication, accessibility, and inclusivity.

American Sign Language Recognition Using Python Opencv Keras - Riset

American Sign Language (ASL) recognition aims to recognize hand gestures and is a crucial aid to communication between the deaf community and hearing people. However, existing sign language recognition algorithms still have drawbacks, such as difficulty recognizing hand movements and low recognition accuracy. A Modified Convolutional Neural ...

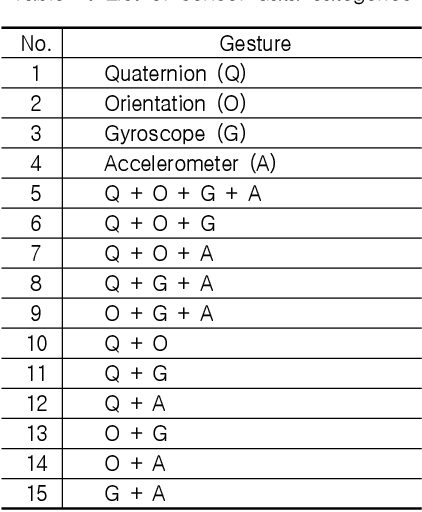

Table 2 From American Sign Language Recognition System Using Wearable ...

This project aims to detect American Sign Language using PyTorch and deep learning. The neural network can also detect sign language letters in real time from a webcam video feed. The project also includes a program that lets users search dictionaries of American Sign Language (ASL) to look up the meaning of ...
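Real-time recognition from a webcam usually produces one prediction per frame, and those predictions can flicker between letters. One common way to stabilize them is a majority vote over the most recent frames, sketched below. The class name `PredictionSmoother` and its `window` and `min_share` parameters are hypothetical. This is not taken from the project's code, and the per-frame labels are assumed to come from whatever classifier is in use.

```python
from collections import Counter, deque


class PredictionSmoother:
    """Majority vote over the last `window` per-frame labels (illustrative sketch)."""

    def __init__(self, window=15, min_share=0.6):
        self.window = deque(maxlen=window)  # oldest labels drop off automatically
        self.min_share = min_share          # fraction of the window that must agree

    def update(self, label):
        """Add one frame's label; return the stable label, or None if undecided."""
        self.window.append(label)
        if len(self.window) < self.window.maxlen:
            return None  # not enough frames seen yet
        top_label, count = Counter(self.window).most_common(1)[0]
        if count / len(self.window) >= self.min_share:
            return top_label
        return None


# Usage: feed per-frame predictions in order and keep only confident outputs.
smoother = PredictionSmoother(window=5, min_share=0.6)
frame_labels = ["A", "A", "B", "A", "A", "B", "B", "B"]
stable = [smoother.update(label) for label in frame_labels]
```

A larger window gives steadier output at the cost of a longer delay before a new letter is reported.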

Gesture recognition plays a vital role in computer vision, especially for interpreting sign language and enabling human ...

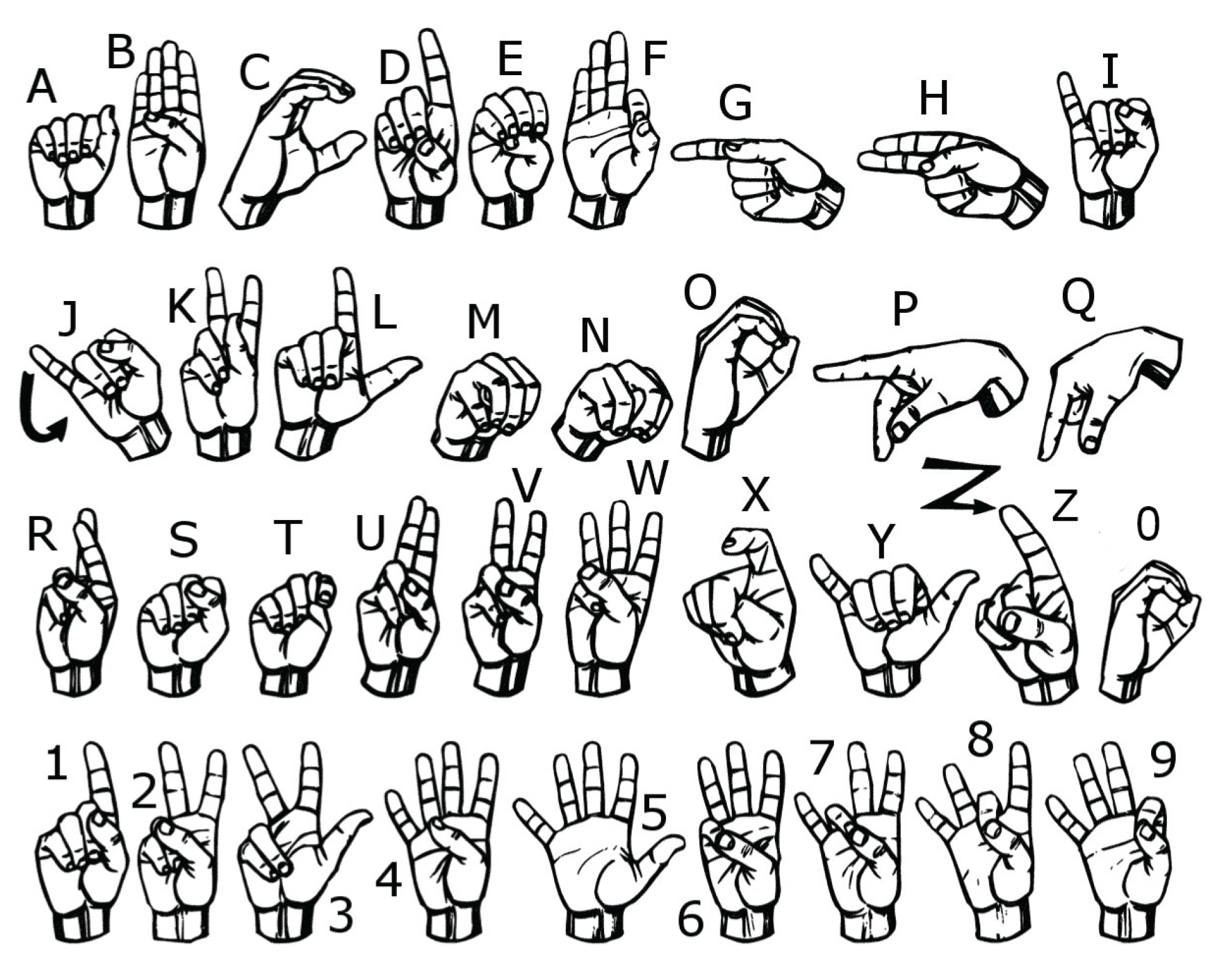

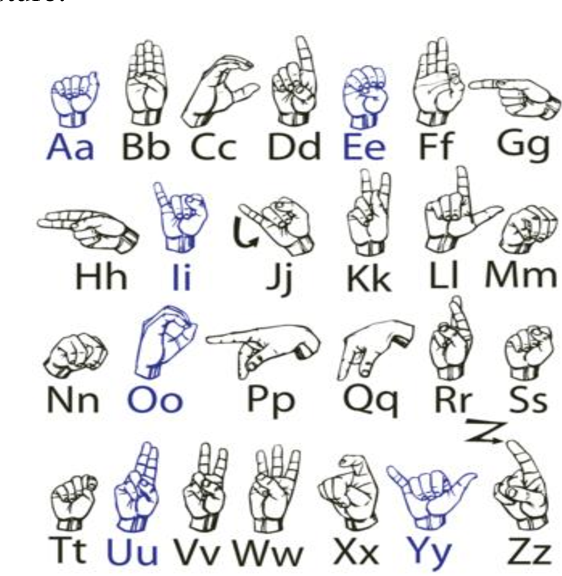

American Alphabet Sign Language Recognition System

The most ordinary form of communication relies on alphabetic expression through speech, writing, or sign language.

American Sign Language Hand Gesture Recognition | By Rawini Dias ...

American Sign Language Recognition Using Leap Motion Controller With ...

Figure 2.1 From American Sign Language Recognition Using Convolution ...

GitHub - Gunnika/Sign_Language_Detector-PyTorch: Recognition Of Hand ...

GitHub - Themechanicalcoder/American-Sign-Language-Recognition: Real ...

American Sign Language Recognition Using Deep Learning

Figure 2 From American Sign Language Recognition System Using Image ...

Two-Stream Mixed Convolutional Neural Network For American Sign ...

American Sign Language Recognition In Python Using Deep Learning ...

American Sign Language Alphabet Recognition Using Deep Learning | DeepAI