{

"cells": [

{

"cell_type": "markdown",

"metadata": {

"id": "ZjN_IJ8mhJ-4"

},

"source": [

"##### Copyright 2023 The TensorFlow Authors."

]

},

{

"cell_type": "code",

"execution_count": 1,

"metadata": {

"cellView": "form",

"execution": {

"iopub.execute_input": "2024-01-11T18:39:09.613493Z",

"iopub.status.busy": "2024-01-11T18:39:09.612915Z",

"iopub.status.idle": "2024-01-11T18:39:09.616588Z",

"shell.execute_reply": "2024-01-11T18:39:09.615947Z"

},

"id": "sY3Ffd83hK3b"

},

"outputs": [],

"source": [

"#@title Licensed under the Apache License, Version 2.0 (the \"License\");\n",

"# you may not use this file except in compliance with the License.\n",

"# You may obtain a copy of the License at\n",

"#\n",

"# https://www.apache.org/licenses/LICENSE-2.0\n",

"#\n",

"# Unless required by applicable law or agreed to in writing, software\n",

"# distributed under the License is distributed on an \"AS IS\" BASIS,\n",

"# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n",

"# See the License for the specific language governing permissions and\n",

"# limitations under the License."

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "03Pw58e6mTHI"

},

"source": [

"# TF-NumPy Type Promotion"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "uma-W5v__DYh"

},

"source": [

"## Overview\n",

"\n",

"There are 4 options for type promotion in TensorFlow.\n",

"\n",

"- By default, TensorFlow raises an error instead of promoting types for mixed-type operations.\n",

"- Running `tf.experimental.numpy.experimental_enable_numpy_behavior()` switches TensorFlow to use [NumPy type promotion rules](https://www.tensorflow.org/guide/tf_numpy#type_promotion).\n",

"- **This doc** describes two new options that will be available in TensorFlow 2.15 (and are currently available in `tf-nightly`)."

]

},

{

"cell_type": "code",

"execution_count": 2,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:09.620176Z",

"iopub.status.busy": "2024-01-11T18:39:09.619812Z",

"iopub.status.idle": "2024-01-11T18:39:34.309902Z",

"shell.execute_reply": "2024-01-11T18:39:34.308809Z"

},

"id": "vMvEKDFOsau7"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"\u001b[31mERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.\r\n",

"tensorflow 2.15.0.post1 requires ml-dtypes~=0.2.0, but you have ml-dtypes 0.3.2 which is incompatible.\u001b[0m\u001b[31m\r\n",

"\u001b[0m"

]

}

],

"source": [

"!pip install -q tf_nightly"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "a6hOFBfPsd3y"

},

"source": [

"**Note**: `experimental_enable_numpy_behavior` changes the behavior of all of TensorFlow."

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "ob1HNwUmYR5b"

},

"source": [

"## Setup"

]

},

{

"cell_type": "code",

"execution_count": 3,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:34.314508Z",

"iopub.status.busy": "2024-01-11T18:39:34.314247Z",

"iopub.status.idle": "2024-01-11T18:39:36.671809Z",

"shell.execute_reply": "2024-01-11T18:39:36.671118Z"

},

"id": "AJR558zjAZQu"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Using TensorFlow version 2.16.0-dev20240110\n"

]

}

],

"source": [

"import numpy as np\n",

"import tensorflow as tf\n",

"import tensorflow.experimental.numpy as tnp\n",

"\n",

"print(\"Using TensorFlow version %s\" % tf.__version__)"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "M6tacoy0DU6e"

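},

"source": [

"### Enabling the new type promotion\n",

"\n",

"To use the [JAX-like type promotion](https://jax.readthedocs.io/en/latest/type_promotion.html) in TF-NumPy, specify either `'all'` or `'safe'` as the dtype conversion mode when enabling NumPy behavior for TensorFlow.\n",

"\n",

"This new system (with `dtype_conversion_mode=\"all\"`) is associative and commutative, and it makes it easy to control what width of float you end up with (it does not automatically convert to wider floats). It does introduce some risk of overflow and precision loss, but `dtype_conversion_mode=\"safe\"` forces you to handle those cases explicitly. The two modes are explained in more detail in the [next section](#two_modes)."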

]

},

{

"cell_type": "code",

"execution_count": 4,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:36.675284Z",

"iopub.status.busy": "2024-01-11T18:39:36.674925Z",

"iopub.status.idle": "2024-01-11T18:39:36.679183Z",

"shell.execute_reply": "2024-01-11T18:39:36.678508Z"

},

"id": "TfCyofpFDQxm"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.\n"

]

}

],

"source": [

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"all\")"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "sEMXK8-ZWMun"

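},

"source": [

"<a name=\"two_modes\"></a>\n",

"\n",

"## Two modes: ALL mode vs SAFE mode\n",

"\n",

"The new type promotion system introduces two modes: `ALL` mode and `SAFE` mode. `SAFE` mode is used to mitigate the concerns of \"risky\" promotions that can result in precision loss or bit-widening."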

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "-ULvTWj_KnHU"

},

"source": [

"### Dtypes\n",

"\n",

"The following abbreviations are used for brevity.\n",

"\n",

"- `b` means `tf.bool`\n",

"- `u8` means `tf.uint8`\n",

"- `i16` means `tf.int16`\n",

"- `i32` means `tf.int32`\n",

"- `bf16` means `tf.bfloat16`\n",

"- `f32` means `tf.float32`\n",

"- `f64` means `tf.float64`\n",

"- `i32*` means Python `int` or weakly typed `i32`\n",

"- `f32*` means Python `float` or weakly typed `f32`\n",

"- `c128*` means Python `complex` or weakly typed `c128`\n",

"\n",

"Other dtypes follow the same pattern (for example, `i8` is `tf.int8`, `u32` is `tf.uint32`, and `f16` is `tf.float16`). The asterisk (*) denotes that the corresponding type is \"weak\": such a dtype is temporarily inferred by the system and could defer to other dtypes. This concept is explained in more detail [here](#weak_tensor)."

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "hXZxLCkuzzq3"

},

"source": [

"### Example of precision-losing operations\n",

"\n",

"In the following example, `i32` + `f32` is allowed in `ALL` mode but not in `SAFE` mode, because of the risk of precision loss."

]

},

{

"cell_type": "code",

"execution_count": 5,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:36.682553Z",

"iopub.status.busy": "2024-01-11T18:39:36.682299Z",

"iopub.status.idle": "2024-01-11T18:39:38.940240Z",

"shell.execute_reply": "2024-01-11T18:39:38.939470Z"

},

"id": "Y-yeIvstWStL"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.\n"

]

},

{

"data": {

"text/plain": [

""

]

},

"execution_count": 5,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# i32 + f32 returns an f32 result in ALL mode.\n",

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"all\")\n",

"a = tf.constant(10, dtype=tf.int32)\n",

"b = tf.constant(5.0, dtype=tf.float32)\n",

"a + b  # Result dtype: float32"

]

},

{

"cell_type": "code",

"execution_count": 6,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:38.944342Z",

"iopub.status.busy": "2024-01-11T18:39:38.943682Z",

"iopub.status.idle": "2024-01-11T18:39:38.949645Z",

"shell.execute_reply": "2024-01-11T18:39:38.948939Z"

},

"id": "JNNmZow2WY3G"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"<class 'TypeError'>: In promotion mode PromoMode.SAFE, implicit dtype promotion between (<dtype: 'int32'>, weak=False) and (<dtype: 'float32'>, weak=False) is disallowed. You need to explicitly specify the dtype in your op, or relax your dtype promotion rules (such as from SAFE mode to ALL mode).\n"

]

}

],

"source": [

"# This promotion is not allowed in SAFE mode.\n",

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"safe\")\n",

"a = tf.constant(10, dtype=tf.int32)\n",

"b = tf.constant(5.0, dtype=tf.float32)\n",

"try:\n",

"  a + b\n",

"except TypeError as e:\n",

"  print(f'{type(e)}: {e}')  # TypeError: explicitly specify the dtype or switch to ALL mode."

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "f0x4Qhff0AKS"

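},

"source": [

"### Example of bit-widening operations\n",

"\n",

"In the following example, `i8` + `u32` is allowed in `ALL` mode but not in `SAFE` mode, because of bit-widening: the result uses more bits than either input. Note that the new type promotion semantics only allow bit-widening where it is necessary."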

]

},

{

"cell_type": "code",

"execution_count": 7,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:38.953095Z",

"iopub.status.busy": "2024-01-11T18:39:38.952539Z",

"iopub.status.idle": "2024-01-11T18:39:38.966514Z",

"shell.execute_reply": "2024-01-11T18:39:38.965879Z"

},

"id": "Etbv-WoWzUXf"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.\n"

]

},

{

"data": {

"text/plain": [

""

]

},

"execution_count": 7,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# i8 + u32 returns an i64 result in ALL mode.\n",

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"all\")\n",

"a = tf.constant(10, dtype=tf.int8)\n",

"b = tf.constant(5, dtype=tf.uint32)\n",

"a + b"

]

},

{

"cell_type": "code",

"execution_count": 8,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:38.969549Z",

"iopub.status.busy": "2024-01-11T18:39:38.969271Z",

"iopub.status.idle": "2024-01-11T18:39:38.975040Z",

"shell.execute_reply": "2024-01-11T18:39:38.974386Z"

},

"id": "yKRdvtvw0Lvt"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"<class 'TypeError'>: In promotion mode PromoMode.SAFE, implicit dtype promotion between (<dtype: 'int8'>, weak=False) and (<dtype: 'uint32'>, weak=False) is disallowed. You need to explicitly specify the dtype in your op, or relax your dtype promotion rules (such as from SAFE mode to ALL mode).\n"

]

}

],

"source": [

"# This promotion is not allowed in SAFE mode.\n",

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"safe\")\n",

"a = tf.constant(10, dtype=tf.int8)\n",

"b = tf.constant(5, dtype=tf.uint32)\n",

"try:\n",

"  a + b\n",

"except TypeError as e:\n",

"  print(f'{type(e)}: {e}')  # TypeError: explicitly specify the dtype or switch to ALL mode."

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "yh2BwqUzH3C3"

},

"source": [

"## A system based on a lattice"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "HHUnfTPiYVN5"

},

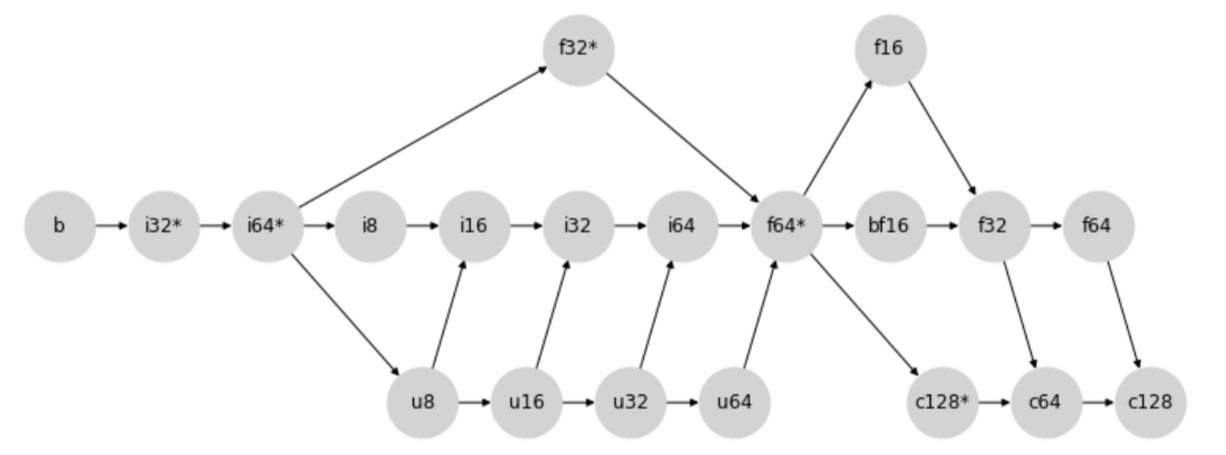

"source": [

"### 型昇格格子\n",

"\n",

"新しい型昇格の動作は、次の型昇格の格子を通じて決定されます。\n",

"\n",

""

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "QykluwRyDDle"

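},

"source": [

"More specifically, promotion between any two types is determined by finding the first common child of the two nodes (including the nodes themselves).\n",

"\n",

"For example, in the diagram above, the first common child of `i8` and `i32` is `i32`, because the two nodes first intersect at `i32` when following the direction of the arrows.\n",

"\n",

"Similarly, the result promotion type between `u64` and `f16` is `f16`."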

]

},
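
{

"cell_type": "markdown",

"metadata": {},

"source": [

"The \"first common child\" lookup described above can be sketched in plain Python. The next cell is a minimal illustration, not TensorFlow's implementation: the `LATTICE` table is a simplified assumption modeled on the lattice in the JAX documentation (complex dtypes are omitted, and `i*` and `f*` stand in for weakly typed Python ints and floats), and `reachable` and `promote` are hypothetical helper names."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# Assumed, simplified lattice modeled on the JAX docs (complex dtypes omitted).\n",

"# Keys are nodes; values are their direct children along the arrows.\n",

"# 'i*' and 'f*' stand in for weakly typed Python ints and floats.\n",

"LATTICE = {\n",

"  'b': ['i*'],\n",

"  'i*': ['u8', 'i8'],\n",

"  'u8': ['u16', 'i16'],\n",

"  'u16': ['u32', 'i32'],\n",

"  'u32': ['u64', 'i64'],\n",

"  'u64': ['f*'],\n",

"  'i8': ['i16'],\n",

"  'i16': ['i32'],\n",

"  'i32': ['i64'],\n",

"  'i64': ['f*'],\n",

"  'f*': ['bf16', 'f16'],\n",

"  'bf16': ['f32'],\n",

"  'f16': ['f32'],\n",

"  'f32': ['f64'],\n",

"  'f64': [],\n",

"}\n",

"\n",

"\n",

"def reachable(node):\n",

"  \"\"\"Returns the node itself plus every node reachable along the arrows.\"\"\"\n",

"  seen, stack = {node}, [node]\n",

"  while stack:\n",

"    for child in LATTICE[stack.pop()]:\n",

"      if child not in seen:\n",

"        seen.add(child)\n",

"        stack.append(child)\n",

"  return seen\n",

"\n",

"\n",

"def promote(a, b):\n",

"  \"\"\"Returns the first common child of a and b (their join in the lattice).\"\"\"\n",

"  common = reachable(a) & reachable(b)\n",

"  # The join is the common node from which every other common node is reachable.\n",

"  for candidate in common:\n",

"    if common <= reachable(candidate):\n",

"      return candidate\n",

"  raise TypeError(f'no unique promotion for {a} and {b}')\n",

"\n",

"\n",

"print(promote('i8', 'i32'))   # i32\n",

"print(promote('u64', 'f16'))  # f16\n",

"print(promote('i8', 'u32'))   # i64"

]

},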

{

"cell_type": "markdown",

"metadata": {

"id": "nthziRHaDAUY"

},

"source": [

"\n",

"\n",

"\n",

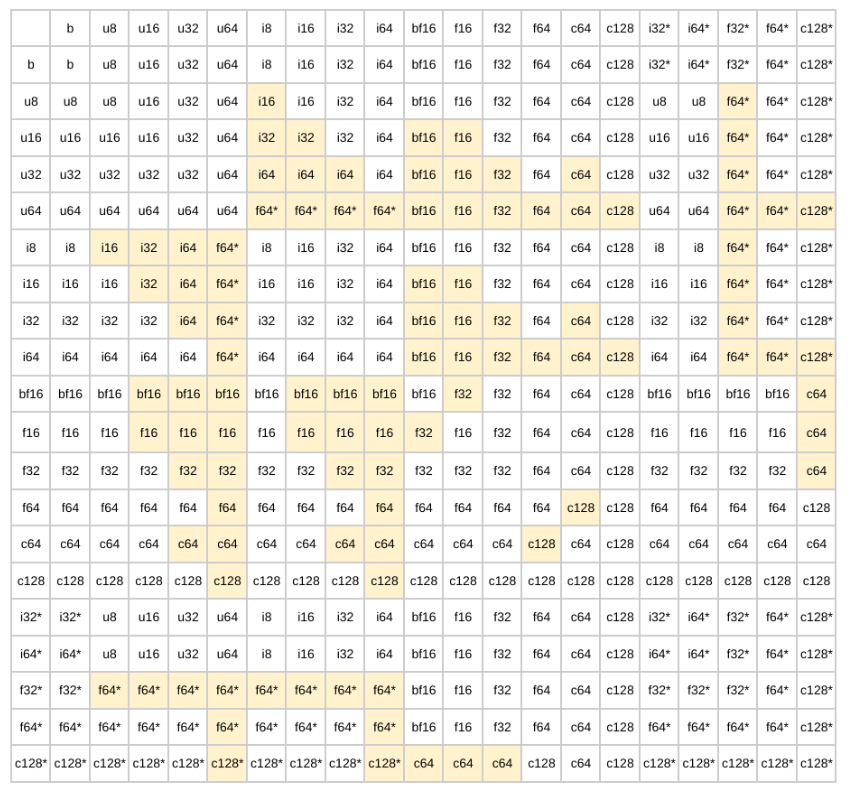

"### 型昇格テーブル\n",

"\n",

"格子に従うと、以下のバイナリ昇格テーブルが生成されます。\n",

"\n",

"**注意**: `SAFE` モードでは、ハイライトされたセルは許可されません。`ALL` モードではすべてのケースが許可されます。\n",

"\n",

""

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "TPDt5QTkucSC"

},

"source": [

"## Advantages of the new type promotion\n",

"\n",

"The new type promotion adopts a JAX-like lattice-based system, which offers the following advantages:"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "NUS_b13nue1p"

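},

"source": [

"<a name=\"lattice_system_design\"></a>\n",

"\n",

"#### Advantages of a lattice-based system\n",

"\n",

"First, using a lattice-based system ensures three very important properties:\n",

"\n",

"- Existence: There is a unique result promotion type for any combination of types.\n",

"- Commutativity: `a + b = b + a`\n",

"- Associativity: `a + (b + c) = (a + b) + c`\n",

"\n",

"These three properties are critical for constructing type promotion semantics that are consistent and predictable."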

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "Sz88hRR6uhls"

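},

"source": [

"#### Advantages of the JAX-like lattice system\n",

"\n",

"Another crucial advantage of the JAX-like lattice system is that, outside of unsigned ints, it avoids all wider-than-necessary promotions. This means you cannot get 64-bit results without 64-bit inputs. This is especially beneficial when working on accelerators, because it avoids the unnecessary 64-bit values that were frequent in the old type promotion."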

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "rlylb7ieOVbJ"

},

"source": [

"However, this comes with a trade-off: mixed float/integer promotion is very prone to precision loss. For instance, in the example below, `i64` + `f16` promotes `i64` to `f16`."

]

},

{

"cell_type": "code",

"execution_count": 9,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:38.979317Z",

"iopub.status.busy": "2024-01-11T18:39:38.978688Z",

"iopub.status.idle": "2024-01-11T18:39:38.988076Z",

"shell.execute_reply": "2024-01-11T18:39:38.987492Z"

},

"id": "abqIkV02OXEF"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.\n"

]

},

{

"data": {

"text/plain": [

""

]

},

"execution_count": 9,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# The first input is promoted to f16 in ALL mode.\n",

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"all\")\n",

"tf.constant(1, tf.int64) + tf.constant(3.2, tf.float16)  # Result dtype: float16"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "mYnh1gZdObfI"

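},

"source": [

"To mitigate this concern, a `SAFE` mode was introduced that disallows these \"risky\" promotions.\n",

"\n",

"**Note**: To learn more about the design considerations in constructing the lattice system, see the [Design of Type Promotion Semantics for JAX](https://jax.readthedocs.io/en/latest/jep/9407-type-promotion.html)."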

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "gAc7LFV0S2dP"

},

"source": [

"<a name=\"weak_tensor\"></a>\n",

"\n",

"## WeakTensor"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "olQ2gsFlS9BH"

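},

"source": [

"### Overview\n",

"\n",

"*Weak tensors* are tensors that are \"weakly typed\", similar to a [concept in JAX](https://jax.readthedocs.io/en/latest/type_promotion.html#weakly-typed-values-in-jax).\n",

"\n",

"A `WeakTensor`'s dtype is temporarily inferred by the system and could defer to other dtypes. This concept is introduced in the new type promotion to prevent unwanted type promotion within binary operations between TF values and values with no explicitly user-specified type, such as Python scalar literals."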

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "MYmoFIqZTFtw"

},

"source": [

"For instance, in the example below, `tf.constant(1.2)` is considered \"weak\" because it does not have a specific dtype. Therefore, `tf.constant(1.2)` defers to the type of `tf.constant(3.1, tf.float16)`, resulting in an `f16` output."

]

},

{

"cell_type": "code",

"execution_count": 10,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:38.991522Z",

"iopub.status.busy": "2024-01-11T18:39:38.991283Z",

"iopub.status.idle": "2024-01-11T18:39:38.997655Z",

"shell.execute_reply": "2024-01-11T18:39:38.997064Z"

},

"id": "eSBv_mzyTE97"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 10,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"tf.constant(1.2) + tf.constant(3.1, tf.float16)  # Result dtype: float16"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "KxuqBIFuTm5Z"

},

"source": [

"### WeakTensor construction\n",

"\n",

"A WeakTensor is created when you create a tensor without specifying a dtype. You can check whether a tensor is \"weak\" by looking at the `weak` attribute at the end of the tensor's string representation."

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "7UmunnJ8Tru3"

},

"source": [

"**First case**: When `tf.constant` is called with an input that has no user-specified dtype."

]

},

{

"cell_type": "code",

"execution_count": 11,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.001017Z",

"iopub.status.busy": "2024-01-11T18:39:39.000500Z",

"iopub.status.idle": "2024-01-11T18:39:39.005039Z",

"shell.execute_reply": "2024-01-11T18:39:39.004447Z"

},

"id": "fLEtMluNTsI5"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 11,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"tf.constant(5)  # WeakTensor: dtype int32, weak=True"

]

},

{

"cell_type": "code",

"execution_count": 12,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.008026Z",

"iopub.status.busy": "2024-01-11T18:39:39.007499Z",

"iopub.status.idle": "2024-01-11T18:39:39.013076Z",

"shell.execute_reply": "2024-01-11T18:39:39.012523Z"

},

"id": "ZQX6MBWHTt__"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 12,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"tf.constant([5.0, 10.0, 3])  # WeakTensor: dtype float32, weak=True"

]

},

{

"cell_type": "code",

"execution_count": 13,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.016037Z",

"iopub.status.busy": "2024-01-11T18:39:39.015666Z",

"iopub.status.idle": "2024-01-11T18:39:39.020121Z",

"shell.execute_reply": "2024-01-11T18:39:39.019499Z"

},

"id": "ftsKSC5BTweP"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 13,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# A normal Tensor is created when dtype arg is specified.\n",

"tf.constant(5, tf.int32)  # Regular Tensor: dtype int32"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "RqhoRy5iTyag"

},

"source": [

"**Second case**: When an input with no user-specified dtype is passed into a [WeakTensor-supporting API](#weak_tensor_apis)."

]

},

{

"cell_type": "code",

"execution_count": 14,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.023281Z",

"iopub.status.busy": "2024-01-11T18:39:39.022835Z",

"iopub.status.idle": "2024-01-11T18:39:39.028996Z",

"shell.execute_reply": "2024-01-11T18:39:39.028286Z"

},

"id": "DuwpgoQJTzE-"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 14,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"tf.math.abs([100.0, 4.0])  # WeakTensor: dtype float32, weak=True"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "UTcoR1xvR39k"

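},

"source": [

"## Effects of turning on the new type promotion\n",

"\n",

"Below is a non-exhaustive list of changes that result from turning on the new type promotion.\n",

"\n",

"- More consistent and predictable promotion results.\n",

"- Reduced risk of bit-widening.\n",

"- `tf.Tensor` mathematical dunder methods use the new type promotion.\n",

"- `tf.constant` can return a `WeakTensor`.\n",

"- `tf.constant` allows implicit conversions when a Tensor input with a dtype different from the `dtype` arg is passed in.\n",

"- `tf.Variable` in-place ops (`assign`, `assign_add`, `assign_sub`) allow implicit conversions.\n",

"- `tnp.array(1)` and `tnp.array(1.0)` return 32-bit WeakTensors.\n",

"- `WeakTensor`s are created and used for [WeakTensor-supporting unary and binary APIs](#weak_tensor_apis).\n"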

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "KyvonwYcsFX2"

},

"source": [

"### More consistent and predictable promotion results\n",

"\n",

"Using a [lattice-based system](#lattice_system_design) allows the new type promotion to produce consistent and predictable type promotion results."

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "q0Z1njfb7lRa"

},

"source": [

"#### Old type promotion\n",

"\n",

"With the old type promotion, changing the order of operations produces inconsistent results."

]

},

{

"cell_type": "code",

"execution_count": 15,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.032666Z",

"iopub.status.busy": "2024-01-11T18:39:39.032109Z",

"iopub.status.idle": "2024-01-11T18:39:39.036008Z",

"shell.execute_reply": "2024-01-11T18:39:39.035394Z"

},

"id": "M1Ca9v4m7z8e"

},

"outputs": [],

"source": [

"# Setup\n",

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"legacy\")\n",

"a = np.array(1, dtype=np.int8)\n",

"b = tf.constant(1)\n",

"c = np.array(1, dtype=np.float16)"

]

},

{

"cell_type": "code",

"execution_count": 16,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.038770Z",

"iopub.status.busy": "2024-01-11T18:39:39.038526Z",

"iopub.status.idle": "2024-01-11T18:39:39.043639Z",

"shell.execute_reply": "2024-01-11T18:39:39.043018Z"

},

"id": "WwhTzJ-a4rTc"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

": cannot compute AddV2 as input #1(zero-based) was expected to be a int8 tensor but is a int32 tensor [Op:AddV2] name: \n"

]

}

],

"source": [

"# (a + b) + c throws an InvalidArgumentError.\n",

"try:\n",

"  tf.add(tf.add(a, b), c)\n",

"except tf.errors.InvalidArgumentError as e:\n",

"  print(f'{type(e)}: {e}')  # InvalidArgumentError"

]

},

{

"cell_type": "code",

"execution_count": 17,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.046642Z",

"iopub.status.busy": "2024-01-11T18:39:39.046171Z",

"iopub.status.idle": "2024-01-11T18:39:39.051767Z",

"shell.execute_reply": "2024-01-11T18:39:39.051171Z"

},

"id": "d3qDgVYn7ezT"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 17,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# (b + a) + c returns an i32 result.\n",

"tf.add(tf.add(b, a), c)"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "YMH1skEs7oI5"

},

"source": [

"#### New type promotion\n",

"\n",

"The new type promotion produces consistent results regardless of the order."

]

},

{

"cell_type": "code",

"execution_count": 18,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.055106Z",

"iopub.status.busy": "2024-01-11T18:39:39.054531Z",

"iopub.status.idle": "2024-01-11T18:39:39.059146Z",

"shell.execute_reply": "2024-01-11T18:39:39.058485Z"

},

"id": "BOHyJJ8z8uCN"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.\n"

]

}

],

"source": [

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"all\")\n",

"a = np.array(1, dtype=np.int8)\n",

"b = tf.constant(1)\n",

"c = np.array(1, dtype=np.float16)"

]

},

{

"cell_type": "code",

"execution_count": 19,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.061945Z",

"iopub.status.busy": "2024-01-11T18:39:39.061712Z",

"iopub.status.idle": "2024-01-11T18:39:39.069640Z",

"shell.execute_reply": "2024-01-11T18:39:39.068917Z"

},

"id": "ZUKU70jf7E1l"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 19,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# (a + b) + c returns an f16 result.\n",

"tf.add(tf.add(a, b), c)"

]

},

{

"cell_type": "code",

"execution_count": 20,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.072713Z",

"iopub.status.busy": "2024-01-11T18:39:39.072473Z",

"iopub.status.idle": "2024-01-11T18:39:39.078540Z",

"shell.execute_reply": "2024-01-11T18:39:39.077906Z"

},

"id": "YOEycjFx7qDn"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 20,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"# (b + a) + c also returns an f16 result.\n",

"tf.add(tf.add(b, a), c)"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "FpGMkm6aJsn6"

},

"source": [

"### Reduced risk of bit-widening"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "JxV2AL-U9Grg"

},

"source": [

"#### Old type promotion\n",

"\n",

"The old type promotion sometimes produced 64-bit results."

]

},

{

"cell_type": "code",

"execution_count": 21,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.082062Z",

"iopub.status.busy": "2024-01-11T18:39:39.081475Z",

"iopub.status.idle": "2024-01-11T18:39:39.084657Z",

"shell.execute_reply": "2024-01-11T18:39:39.084060Z"

},

"id": "7L1pxyvn9MlP"

},

"outputs": [],

"source": [

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"legacy\")"

]

},

{

"cell_type": "code",

"execution_count": 22,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.088150Z",

"iopub.status.busy": "2024-01-11T18:39:39.087529Z",

"iopub.status.idle": "2024-01-11T18:39:39.096471Z",

"shell.execute_reply": "2024-01-11T18:39:39.095882Z"

},

"id": "zMJVFdWf4XHp"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 22,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"np.array(3.2, np.float16) + tf.constant(1, tf.int8) + tf.constant(50)  # Result is 64-bit: float64"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "fBhUH_wD9Is7"

},

"source": [

"#### New type promotion\n",

"\n",

"The new type promotion returns results with the minimum number of bits necessary."

]

},

{

"cell_type": "code",

"execution_count": 23,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.099601Z",

"iopub.status.busy": "2024-01-11T18:39:39.099337Z",

"iopub.status.idle": "2024-01-11T18:39:39.103131Z",

"shell.execute_reply": "2024-01-11T18:39:39.102551Z"

},

"id": "aJsj2ZyI9T9Y"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.\n"

]

}

],

"source": [

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"all\")"

]

},

{

"cell_type": "code",

"execution_count": 24,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.106381Z",

"iopub.status.busy": "2024-01-11T18:39:39.105957Z",

"iopub.status.idle": "2024-01-11T18:39:39.113206Z",

"shell.execute_reply": "2024-01-11T18:39:39.112627Z"

},

"id": "jj0N_Plp4X9l"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 24,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"np.array(3.2, np.float16) + tf.constant(1, tf.int8) + tf.constant(50)  # Result dtype: float16"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "yKUx7xe-KZ5O"

},

"source": [

"### tf.Tensor mathematical dunder methods\n",

"\n",

"All `tf.Tensor` mathematical dunder methods follow the new type promotion."

]

},

{

"cell_type": "code",

"execution_count": 25,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.116686Z",

"iopub.status.busy": "2024-01-11T18:39:39.116057Z",

"iopub.status.idle": "2024-01-11T18:39:39.121760Z",

"shell.execute_reply": "2024-01-11T18:39:39.121145Z"

},

"id": "2c3icBUX4wNl"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 25,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"-tf.constant(5)  # WeakTensor: dtype int32, weak=True"

]

},

{

"cell_type": "code",

"execution_count": 26,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.125172Z",

"iopub.status.busy": "2024-01-11T18:39:39.124612Z",

"iopub.status.idle": "2024-01-11T18:39:39.133228Z",

"shell.execute_reply": "2024-01-11T18:39:39.132646Z"

},

"id": "ydJHQjid45s7"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 26,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"tf.constant(5, tf.int16) - tf.constant(1, tf.float32)  # Result dtype: float32"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "pLbIjIvbKqcU"

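},

"source": [

"### tf.Variable in-place ops\n",

"\n",

"Implicit conversions are allowed in `tf.Variable` in-place ops.\n",

"\n",

"**Note**: Any promotion that results in a dtype different from the variable's original dtype is not allowed, because a `tf.Variable` cannot change its dtype."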

]

},

{

"cell_type": "code",

"execution_count": 27,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.136501Z",

"iopub.status.busy": "2024-01-11T18:39:39.136102Z",

"iopub.status.idle": "2024-01-11T18:39:39.147199Z",

"shell.execute_reply": "2024-01-11T18:39:39.146619Z"

},

"id": "QsXhyK1h-i5S"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.\n"

]

},

{

"data": {

"text/plain": [

""

]

},

"execution_count": 27,

"metadata": {},

"output_type": "execute_result"

}

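],

"source": [

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"all\")\n",

"# Pass dtype by keyword: the second positional argument of tf.Variable is `trainable`.\n",

"a = tf.Variable(10, dtype=tf.int32)\n",

"a.assign_add(tf.constant(5, tf.int16))  # The variable keeps dtype int32"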

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "PiA4H-otLDit"

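},

"source": [

"### tf.constant implicit conversions\n",

"\n",

"In the old type promotion, `tf.constant` required an input Tensor to have the same dtype as the `dtype` argument. In the new type promotion, the Tensor is implicitly converted to the specified dtype."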

]

},

{

"cell_type": "code",

"execution_count": 28,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.150553Z",

"iopub.status.busy": "2024-01-11T18:39:39.150094Z",

"iopub.status.idle": "2024-01-11T18:39:39.156202Z",

"shell.execute_reply": "2024-01-11T18:39:39.155628Z"

},

"id": "ArrQ9Dj0_OR8"

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"WARNING:tensorflow:UserWarning: enabling the new type promotion must happen at the beginning of the program. Please ensure no TF APIs have been used yet.\n"

]

},

{

"data": {

"text/plain": [

""

]

},

"execution_count": 28,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"tnp.experimental_enable_numpy_behavior(dtype_conversion_mode=\"all\")\n",

"a = tf.constant(10, tf.int16)\n",

"tf.constant(a, tf.float32)  # Result dtype: float32"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "WAcK_-XnLWaP"

},

"source": [

"### TF-NumPy array\n",

"\n",

"In the new type promotion, `tnp.array` defaults to `i32*` and `f32*` for Python inputs."

]

},

{

"cell_type": "code",

"execution_count": 29,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.159310Z",

"iopub.status.busy": "2024-01-11T18:39:39.159065Z",

"iopub.status.idle": "2024-01-11T18:39:39.164072Z",

"shell.execute_reply": "2024-01-11T18:39:39.163483Z"

},

"id": "K1pZnYNh_ahm"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 29,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"tnp.array(1)  # WeakTensor: dtype int32, weak=True"

]

},

{

"cell_type": "code",

"execution_count": 30,

"metadata": {

"execution": {

"iopub.execute_input": "2024-01-11T18:39:39.166790Z",

"iopub.status.busy": "2024-01-11T18:39:39.166573Z",

"iopub.status.idle": "2024-01-11T18:39:39.171785Z",

"shell.execute_reply": "2024-01-11T18:39:39.171206Z"

},

"id": "QoQl2PYP_fMT"

},

"outputs": [

{

"data": {

"text/plain": [

""

]

},

"execution_count": 30,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"tnp.array(1.0)  # WeakTensor: dtype float32, weak=True"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "wK5DpQ3Pz3k5"

},

"source": [

"## Input type inference\n",

"\n",

"In the new type promotion, the types of different inputs are inferred as follows.\n",

"\n",

"- `tf.Tensor`: Since a `tf.Tensor` has a dtype property, no further inference is done.\n",

"- NumPy types: This includes types like `np.array(1)`, `np.int16(1)`, and `np.float32(1.0)`. Since NumPy inputs also have a dtype property, that dtype is taken as the inferred type. Note that NumPy defaults to `i64` and `f64`.\n",

"- Python scalars/nested types: This includes types like `1`, `[1, 2, 3]`, and `(1.0, 2.0)`.\n",

"  - Python `int` is inferred as `i32*`.\n",

"  - Python `float` is inferred as `f32*`.\n",

"  - Python `complex` is inferred as `c128*`.\n",

"- If the input does not fall into any of the above categories but has a dtype property, that dtype is taken as the inferred type."

]

},
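
{

"cell_type": "markdown",

"metadata": {},

"source": [

"The inference rules above can be summarized in a small pure-Python sketch. It does not call TensorFlow: `infer_input_type` and `FakeArray` are hypothetical names used only for illustration, dtypes are written with this guide's abbreviations, and treating a nested sequence as the widest kind among its elements is an assumption based on the `tf.constant([5.0, 10.0, 3])` example earlier in this guide."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def infer_input_type(value):\n",

"  \"\"\"Returns (dtype_name, is_weak) for an input, following the rules above.\"\"\"\n",

"  # Anything that carries a dtype property keeps it, with no further inference.\n",

"  dtype = getattr(value, 'dtype', None)\n",

"  if dtype is not None:\n",

"    return str(dtype), False\n",

"  # Assumption: a nested sequence takes the widest kind among its elements.\n",

"  if isinstance(value, (list, tuple)):\n",

"    names = {infer_input_type(v)[0] for v in value}\n",

"    for name in ('c128', 'f32', 'i32'):\n",

"      if name in names:\n",

"        return name, True\n",

"    raise TypeError('cannot infer a dtype for this sequence')\n",

"  # bool is a subclass of int; the rules above do not cover it, so reject it.\n",

"  if isinstance(value, bool):\n",

"    raise TypeError('bool inputs are outside this sketch')\n",

"  if isinstance(value, int):\n",

"    return 'i32', True\n",

"  if isinstance(value, float):\n",

"    return 'f32', True\n",

"  if isinstance(value, complex):\n",

"    return 'c128', True\n",

"  raise TypeError(f'unsupported input: {value!r}')\n",

"\n",

"\n",

"class FakeArray:\n",

"  \"\"\"Stand-in for a tf.Tensor or NumPy value that exposes a dtype property.\"\"\"\n",

"\n",

"  def __init__(self, dtype):\n",

"    self.dtype = dtype\n",

"\n",

"\n",

"print(infer_input_type(1))                 # ('i32', True)\n",

"print(infer_input_type([5.0, 10.0, 3]))    # ('f32', True)\n",

"print(infer_input_type(FakeArray('i16')))  # ('i16', False)"

]

},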

{

"cell_type": "markdown",

"metadata": {

"id": "g_SPfalfSPgg"

},

"source": [

"# Further reading\n",

"\n",

"The new type promotion closely resembles JAX-NumPy's type promotion. For more details about the new type promotion and its design choices, see the resources below.\n",

"\n",

"- [JAX Type Promotion Semantics](https://jax.readthedocs.io/en/latest/type_promotion.html)\n",

"- [Design of Type Promotion Semantics for JAX](https://jax.readthedocs.io/en/latest/jep/9407-type-promotion.html)\n",

"- [Old TF-NumPy Promotion Semantics](https://www.tensorflow.org/guide/tf_numpy#type_promotion)\n"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "Qg5xBbImT31S"

},

"source": [

"# References"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "gjB0CVhVXBfW"

},

"source": [

"<a name=\"weak_tensor_apis\"></a>\n",

"\n",

"## WeakTensor-supporting APIs"

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "_GVbqlN9aBS2"

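},

"source": [

"Below is a list of APIs that support `WeakTensor`.\n",

"\n",

"For a unary op, this means that if an input with no user-specified type is passed in, it returns a `WeakTensor`.\n",

"\n",

"For a binary op, it follows the promotion table [here](#promotion_table). It may or may not return a `WeakTensor`, depending on the promotion result of the two inputs.\n",

"\n",

"**Note**: All mathematical operations (`+`, `-`, `*`, ...) are supported."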

]

},

{

"cell_type": "markdown",

"metadata": {

"id": "Gi-G68Z8WN2P"

},

"source": [

"- `tf.bitwise.invert`\n",

"- `tf.clip_by_value`\n",

"- `tf.debugging.check_numerics`\n",

"- `tf.expand_dims`\n",

"- `tf.identity`\n",

"- `tf.image.adjust_brightness`\n",

"- `tf.image.adjust_gamma`\n",

"- `tf.image.extract_patches`\n",

"- `tf.image.random_brightness`\n",

"- `tf.image.stateless_random_brightness`\n",

"- `tf.linalg.diag`\n",

"- `tf.linalg.diag_part`\n",

"- `tf.linalg.matmul`\n",

"- `tf.linalg.matrix_transpose`\n",

"- `tf.linalg.tensor_diag_part`\n",

"- `tf.linalg.trace`\n",

"- `tf.math.abs`\n",

"- `tf.math.acos`\n",

"- `tf.math.acosh`\n",

"- `tf.math.add`\n",

"- `tf.math.angle`\n",

"- `tf.math.asin`\n",

"- `tf.math.asinh`\n",

"- `tf.math.atan`\n",

"- `tf.math.atanh`\n",

"- `tf.math.ceil`\n",

"- `tf.math.conj`\n",

"- `tf.math.cos`\n",

"- `tf.math.cosh`\n",

"- `tf.math.digamma`\n",

"- `tf.math.divide_no_nan`\n",

"- `tf.math.divide`\n",

"- `tf.math.erf`\n",

"- `tf.math.erfc`\n",

"- `tf.math.erfcinv`\n",

"- `tf.math.erfinv`\n",

"- `tf.math.exp`\n",

"- `tf.math.expm1`\n",

"- `tf.math.floor`\n",

"- `tf.math.floordiv`\n",

"- `tf.math.floormod`\n",

"- `tf.math.imag`\n",

"- `tf.math.lgamma`\n",

"- `tf.math.log1p`\n",

"- `tf.math.log_sigmoid`\n",

"- `tf.math.log`\n",

"- `tf.math.multiply_no_nan`\n",

"- `tf.math.multiply`\n",

"- `tf.math.ndtri`\n",

"- `tf.math.negative`\n",

"- `tf.math.pow`\n",

"- `tf.math.real`\n",

"- `tf.math.reciprocal_no_nan`\n",

"- `tf.math.reciprocal`\n",

"- `tf.math.reduce_euclidean_norm`\n",

"- `tf.math.reduce_logsumexp`\n",

"- `tf.math.reduce_max`\n",

"- `tf.math.reduce_mean`\n",

"- `tf.math.reduce_min`\n",

"- `tf.math.reduce_prod`\n",

"- `tf.math.reduce_std`\n",

"- `tf.math.reduce_sum`\n",

"- `tf.math.reduce_variance`\n",

"- `tf.math.rint`\n",

"- `tf.math.round`\n",

"- `tf.math.rsqrt`\n",

"- `tf.math.scalar_mul`\n",

"- `tf.math.sigmoid`\n",

"- `tf.math.sign`\n",

"- `tf.math.sin`\n",

"- `tf.math.sinh`\n",

"- `tf.math.softplus`\n",

"- `tf.math.special.bessel_i0`\n",

"- `tf.math.special.bessel_i0e`\n",

"- `tf.math.special.bessel_i1`\n",

"- `tf.math.special.bessel_i1e`\n",

"- `tf.math.special.bessel_j0`\n",

"- `tf.math.special.bessel_j1`\n",

"- `tf.math.special.bessel_k0`\n",

"- `tf.math.special.bessel_k0e`\n",

"- `tf.math.special.bessel_k1`\n",

"- `tf.math.special.bessel_k1e`\n",

"- `tf.math.special.bessel_y0`\n",

"- `tf.math.special.bessel_y1`\n",

"- `tf.math.special.dawsn`\n",

"- `tf.math.special.expint`\n",

"- `tf.math.special.fresnel_cos`\n",

"- `tf.math.special.fresnel_sin`\n",

"- `tf.math.special.spence`\n",

"- `tf.math.sqrt`\n",

"- `tf.math.square`\n",

"- `tf.math.subtract`\n",

"- `tf.math.tan`\n",

"- `tf.math.tanh`\n",

"- `tf.nn.depth_to_space`\n",

"- `tf.nn.elu`\n",

"- `tf.nn.gelu`\n",

"- `tf.nn.leaky_relu`\n",

"- `tf.nn.log_softmax`\n",

"- `tf.nn.relu6`\n",

"- `tf.nn.relu`\n",

"- `tf.nn.selu`\n",

"- `tf.nn.softsign`\n",

"- `tf.nn.space_to_depth`\n",

"- `tf.nn.swish`\n",

"- `tf.ones_like`\n",

"- `tf.realdiv`\n",

"- `tf.reshape`\n",

"- `tf.squeeze`\n",

"- `tf.stop_gradient`\n",

"- `tf.transpose`\n",

"- `tf.truncatediv`\n",

"- `tf.truncatemod`\n",

"- `tf.zeros_like`\n",

"- `tf.experimental.numpy.abs`\n",

"- `tf.experimental.numpy.absolute`\n",

"- `tf.experimental.numpy.amax`\n",

"- `tf.experimental.numpy.amin`\n",

"- `tf.experimental.numpy.angle`\n",

"- `tf.experimental.numpy.arange`\n",

"- `tf.experimental.numpy.arccos`\n",

"- `tf.experimental.numpy.arccosh`\n",

"- `tf.experimental.numpy.arcsin`\n",

"- `tf.experimental.numpy.arcsinh`\n",

"- `tf.experimental.numpy.arctan`\n",

"- `tf.experimental.numpy.arctanh`\n",

"- `tf.experimental.numpy.around`\n",

"- `tf.experimental.numpy.array`\n",

"- `tf.experimental.numpy.asanyarray`\n",

"- `tf.experimental.numpy.asarray`\n",

"- `tf.experimental.numpy.ascontiguousarray`\n",

"- `tf.experimental.numpy.average`\n",

"- `tf.experimental.numpy.bitwise_not`\n",

"- `tf.experimental.numpy.cbrt`\n",

"- `tf.experimental.numpy.ceil`\n",

"- `tf.experimental.numpy.conj`\n",

"- `tf.experimental.numpy.conjugate`\n",

"- `tf.experimental.numpy.copy`\n",

"- `tf.experimental.numpy.cos`\n",

"- `tf.experimental.numpy.cosh`\n",

"- `tf.experimental.numpy.cumprod`\n",

"- `tf.experimental.numpy.cumsum`\n",

"- `tf.experimental.numpy.deg2rad`\n",

"- `tf.experimental.numpy.diag`\n",

"- `tf.experimental.numpy.diagflat`\n",

"- `tf.experimental.numpy.diagonal`\n",

"- `tf.experimental.numpy.diff`\n",

"- `tf.experimental.numpy.empty_like`\n",

"- `tf.experimental.numpy.exp2`\n",

"- `tf.experimental.numpy.exp`\n",

"- `tf.experimental.numpy.expand_dims`\n",

"- `tf.experimental.numpy.expm1`\n",

"- `tf.experimental.numpy.fabs`\n",

"- `tf.experimental.numpy.fix`\n",

"- `tf.experimental.numpy.flatten`\n",

"- `tf.experimental.numpy.flip`\n",

"- `tf.experimental.numpy.fliplr`\n",

"- `tf.experimental.numpy.flipud`\n",

"- `tf.experimental.numpy.floor`\n",

"- `tf.experimental.numpy.full_like`\n",

"- `tf.experimental.numpy.imag`\n",

"- `tf.experimental.numpy.log10`\n",

"- `tf.experimental.numpy.log1p`\n",

"- `tf.experimental.numpy.log2`\n",

"- `tf.experimental.numpy.log`\n",

"- `tf.experimental.numpy.max`\n",

"- `tf.experimental.numpy.mean`\n",

"- `tf.experimental.numpy.min`\n",

"- `tf.experimental.numpy.moveaxis`\n",

"- `tf.experimental.numpy.nanmean`\n",

"- `tf.experimental.numpy.negative`\n",

"- `tf.experimental.numpy.ones_like`\n",

"- `tf.experimental.numpy.positive`\n",

"- `tf.experimental.numpy.prod`\n",

"- `tf.experimental.numpy.rad2deg`\n",

"- `tf.experimental.numpy.ravel`\n",

"- `tf.experimental.numpy.real`\n",

"- `tf.experimental.numpy.reciprocal`\n",

"- `tf.experimental.numpy.repeat`\n",

"- `tf.experimental.numpy.reshape`\n",

"- `tf.experimental.numpy.rot90`\n",

"- `tf.experimental.numpy.round`\n",

"- `tf.experimental.numpy.signbit`\n",

"- `tf.experimental.numpy.sin`\n",

"- `tf.experimental.numpy.sinc`\n",

"- `tf.experimental.numpy.sinh`\n",

"- `tf.experimental.numpy.sort`\n",

"- `tf.experimental.numpy.sqrt`\n",

"- `tf.experimental.numpy.square`\n",

"- `tf.experimental.numpy.squeeze`\n",

"- `tf.experimental.numpy.std`\n",

"- `tf.experimental.numpy.sum`\n",

"- `tf.experimental.numpy.swapaxes`\n",

"- `tf.experimental.numpy.tan`\n",

"- `tf.experimental.numpy.tanh`\n",

"- `tf.experimental.numpy.trace`\n",

"- `tf.experimental.numpy.transpose`\n",

"- `tf.experimental.numpy.triu`\n",

"- `tf.experimental.numpy.vander`\n",

"- `tf.experimental.numpy.var`\n",

"- `tf.experimental.numpy.zeros_like`"

]

}

],

"metadata": {

"accelerator": "GPU",

"colab": {

"name": "tf_numpy_type_promotion.ipynb",

"toc_visible": true

},

"kernelspec": {

"display_name": "Python 3",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.9.18"

}

},

"nbformat": 4,

"nbformat_minor": 0

}
