Torch Distributed Example

This tutorial assumes you have a basic understanding of PyTorch and how to train a simple model. It demonstrates how to structure distributed model training and how to launch and configure distributed data parallel applications. It also summarizes how to write and launch PyTorch distributed data parallel jobs across multiple nodes, with working examples using torch.distributed.launch, torchrun, and mpirun. It showcases training on multiple GPUs through a process called ...

The distributed package included in PyTorch (i.e., torch.distributed) enables researchers and practitioners to easily parallelize their ... PyTorch provides two settings for distributed training: torch.nn.DataParallel (DP) and torch.nn.parallel.DistributedDataParallel (DDP). DDP uses collective communications in the torch.distributed package to synchronize gradients and buffers.

The PyTorch distributed package supports Linux (stable), macOS (stable), and Windows (prototype). By default for Linux, the Gloo and ...

The dataset is downloaded using torchvision and the dataset is ...
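As a rough illustration of the DDP setting described above, the following sketch wraps a small model in DistributedDataParallel and runs one training step. It is not taken from any of the tutorials listed here. It assumes a CPU-only run with the Gloo backend, and the function name `run_demo`, the helper `_free_port`, the toy `nn.Linear` model, and the random data are all hypothetical choices made for illustration. When started by torchrun, the `RANK`, `WORLD_SIZE`, `MASTER_ADDR`, and `MASTER_PORT` environment variables are expected to be set already; otherwise the sketch falls back to a single local process.

```python
import os
import socket

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP


def _free_port() -> int:
    """Ask the OS for an unused TCP port (hypothetical helper for local runs)."""
    with socket.socket() as s:
        s.bind(("127.0.0.1", 0))
        return s.getsockname()[1]


def run_demo() -> float:
    """Run one DDP training step on CPU and return the loss value."""
    # Launchers such as torchrun set these variables; fall back to a
    # single-process group when the script is started directly.
    rank = int(os.environ.get("RANK", 0))
    world_size = int(os.environ.get("WORLD_SIZE", 1))
    os.environ.setdefault("MASTER_ADDR", "127.0.0.1")
    os.environ.setdefault("MASTER_PORT", str(_free_port()))

    # Assumption: Gloo backend, which works without GPUs.
    dist.init_process_group(backend="gloo", rank=rank, world_size=world_size)
    try:
        torch.manual_seed(0)
        # DDP broadcasts the initial parameters from rank 0 and averages
        # gradients across processes during backward().
        model = DDP(nn.Linear(4, 2))
        optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

        # Toy random batch; a real job would shard a dataset per rank.
        inputs = torch.randn(8, 4)
        targets = torch.randn(8, 2)

        optimizer.zero_grad()
        loss = nn.functional.mse_loss(model(inputs), targets)
        loss.backward()  # gradient synchronization happens here
        optimizer.step()
        return loss.item()
    finally:
        dist.destroy_process_group()


if __name__ == "__main__":
    print(f"loss: {run_demo():.4f}")
```

Run directly, the script forms a process group of size one, which is enough to check that the setup works. With several processes, each rank would normally also read its own shard of the data, for example through torch.utils.data.distributed.DistributedSampler.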

From huggingface.co


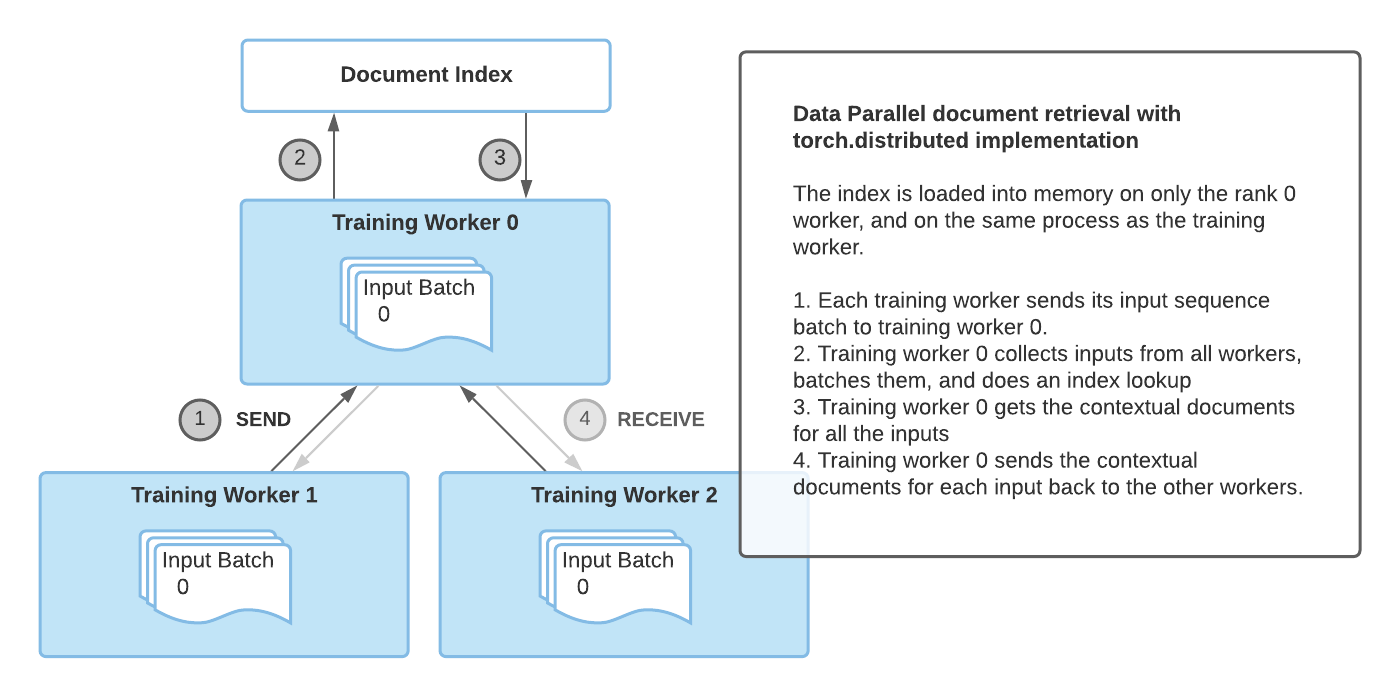

Retrieval Augmented Generation with Huggingface Transformers and Ray


From blog.csdn.net

torch multi-process training (detailed example) - CSDN Blog

From cai-jianfeng.github.io

The Basic Knowledge of PyTorch Distributed Cai Jianfeng

From clear.ml

PyTorch Distributed ClearML

From cztianchao.cn

Argmax Flows and Multinomial Diffusion: Learning Categorical

From blog.csdn.net

torch.distributed.launch multi-GPU multi-node: torch.distributed.launch multi-node multi-GPU training commands - CSDN Blog

From blog.csdn.net

torch multi-process training (detailed example) - CSDN Blog

From clear.ml

PyTorch Distributed ClearML

From github.com

torch.distributed.init_process_group() get stuck after torch

From machinelearningknowledge.ai

[Diagram] How to use torch.gather() Function in PyTorch with Examples

From github.com

torch.distributed.init_process_group setting variables · Issue 13

From github.com

torch.distributed and subprocess do not work together? · Issue 62381

From morioh.com

Introducing PyTorch Fully Sharded Data Parallel (FSDP) API

From codeantenna.com

PyTorch DDP distributed data gathering communication: torch.distributed.all_gather() - CodeAntenna

From github.com

GitHub sckim0430/torchdistribution

From aws.amazon.com

Distributed training with Amazon EKS and Torch Distributed Elastic

From machinelearningknowledge.ai

[Diagram] How to use torch.gather() Function in PyTorch with Examples

From github.com

GitHub sterow/distributed_torch_bench Various distributed Torch

From clear.ml

PyTorch Distributed ClearML

From blog.csdn.net

PyTorch DDP distributed data gathering communication: torch.distributed.all_gather() (reducing metric data in DDP) - CSDN Blog

From www.telesens.co

Distributed data parallel training using Pytorch on AWS Telesens

From blog.csdn.net

torch.distributed multi-card / multi-GPU / distributed DPP (Part 1): torch.distributed.launch & all

From blog.paperspace.com

PyTorch Basics Understanding Autograd and Computation Graphs

From github.com

Can it run with torch.distributed, for example,i want to run with torch

From stackoverflow.com

python torch distributed binding on too many ports and hindering 128

From blog.csdn.net

PyTorch multi-GPU distributed training: python3 -m torch.distributed.run - CSDN Blog

From clear.ml

PyTorch Distributed ClearML

From github.com

Add alltoall collective communication support to torch.distributed

From aws.amazon.com

Distributed training with Amazon EKS and Torch Distributed Elastic

From huggingface.co

Retrieval Augmented Generation with Huggingface Transformers and Ray

From caffe2.ai

Caffe2 Python API torch.distributions.distribution.Distribution

From github.com

torch.distributed.elastic.multiprocessing.errors.ChildFailedError(name

From github.com

How to use torch.distributed.gather? · Issue 14536 · pytorch/pytorch

From github.com

GitHub narumiruna/pytorchdistributedexample

From blog.csdn.net

[PyTorch Study Notes] 2. Generating a computation graph by hand: visualizing the computation flow and gradient propagation between Tensors, using torchviz to generate computation graphs - CSDN Blog

From www.educba.com

PyTorch Distributed Learn the Overview of PyTorch Distributed